Writing on Substack

Hello there! It’s been a long time since I’ve posted anything here. A little while back I started writing a bit over on Substack. If you want to check out what I’m publishing there, head on over to:

https://brandonpaddock.substack.com/

I’m not sure if I’ll stick with that or not, and maybe I’ll copy posts back here at some point. But for now, that’s where you can find my latest long-form thoughts/rants/etc.

Tesla Autopilot part 2 – Safety

This post will focus on answering a simple question: Is Autopilot safe? I’ll answer this two ways: first, by describing my experience and my mental model for what it means for something to be “safe”. Second, by looking at some data. One thing I will not attempt to address here is any legal or civil liability concern.

As background, I suggest reading (or at least skimming) part 1 if you haven’t. I’ll use a number of terms (e.g. TACC and AutoSteer) which are defined in that post.

TL;DR: I believe Autopilot is safe when used responsibly, and that overall the Autopilot convenience features have little effect on overall vehicle and road safety (in either direction).

What does it mean to be “safe”?

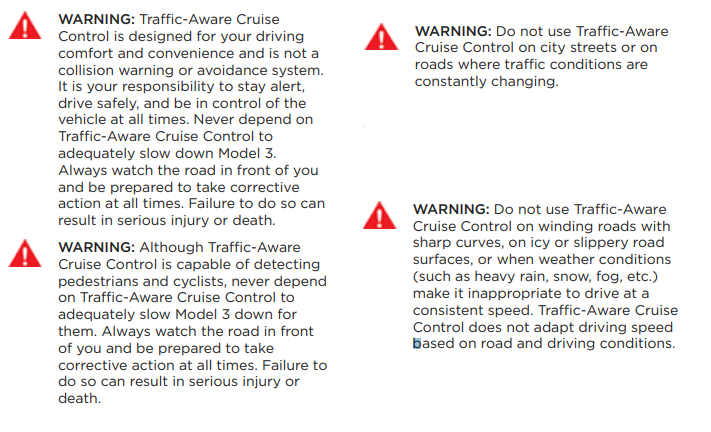

I think Autopilot is safe in the way that cruise control is safe – it isn’t completely without risk, but if used responsibly the risk of it causing a serious problem is minimal. In fact, I believe that Autopilot’s TACC (Traffic Aware Cruise Control) is inherently safer than regular cruise control, and AutoSteer can make you safer in certain situations, such as moments when you might have been distracted anyway (e.g. fiddling with the radio or reaching for some gum). All current Autopilot capabilities fall under the umbrella of an Advanced Driver Assistance System, or what the SAE defines as “Level 2 – Partial Driving Automation”. In short: just as with cruise control, you are driving – the automation is just helping you.

For drivers who misuse the system, or those who actively abuse it, the question gets more complicated. I consider misuse to cover any driver who doesn’t pay attention, or who ignores warnings and guidelines about where, when, and how to use or not use the system. Misuse can arise due to misunderstanding of the system or ignorance of its limitations. Some have argued that misuse is inevitable, perhaps due to automation fatigue. Others have argued that Tesla encourages misuse or fails to adequately discourage it. In my opinion Tesla generally does a good job of discouraging misuse and communicating through the product itself how it should or shouldn’t be used. Unfortunately certain aspects of their marketing, some statements from a particular CEO, and the overzealous claims of some ardent fans are not always well-aligned with the product truth. At the very least, there is room for improvement here.

I consider abuse of the system to cover any attempts to circumvent the safeguards of the system (e.g. by using a device to stop the “nags”), or any deliberate attempts to operate the system in a manner other than what is intended.

Some will point to YouTube videos or social media posts depicting misuse or abuse of Autopilot as evidence that there is a problem here. However, I think it is important to view these examples in context. These videos tend to be stunts, most often done to garner attention (and clicks and thus $$). Some have been debunked as faked. More importantly, these kinds of stunts are not unique to Tesla vehicles, Autopilot, or any form of ADAS or automation.

Not sure what I mean? Check these out:

Teen Seriously Hurt in ‘Ghost Ride the Whip’ Stunt When Truck Runs Him Over – YouTube

Woman “Ghost Riding the Whip” into oncoming traffic – YouTube

Ghost Riding The Whip Gone Wrong (No Commentary) – YouTube

Further, Autopilot, like every L2 ADAS, is really an advanced form of “cruise control”. Cruise control itself has been shown to be associated with negative effects on driver attention and behavior (source). Another interesting question worth exploring may be whether Autopilot (or another ADAS) is better or worse in this regard than the “dumb” cruise control found on a great many vehicles for decades.

Net safety impact is complicated

One of the reasons it’s difficult to come up with a clear consensus answer about the safety of Autopilot (or any ADAS really) is that any such system can and almost certainly does have a mix of both positive and negative safety impact. The real challenge is determining the overall “net” effect.

For example, two difficult to balance considerations are:

1) An irresponsible driver who is going to fall asleep at the wheel on a given drive is undoubtedly much safer if they are driving with Autopilot (Autosteer, or Nav On AP) enabled. The same could probably be said for an intoxicated or otherwise impaired driver.

2) The existence of AP could result in irresponsible drivers attempting to drive when tired or intoxicated more than they otherwise would have.

Of course, in the first case the use of Autopilot absolutely does not make those conditions safe. You should absolutely not drive, with or without AP, if you are too tired or have been drinking. However, I think there is a convincing argument that it does likely make these situations safer. If the number of incidents of people who drive in these compromised states were fixed, it would be reasonable to suggest that the addition of AP is a clear safety win.

Unfortunately, consideration #2 is where it gets really complicated, because if the mere presence of Autopilot somehow increases the chances of someone trying to drive when they’re too tired or impaired, then it would very obviously have a negative effect on road safety.

If you assume the answer is that both are true, now you’re in the position of having to determine which effect is greater, and perhaps considering some ethical or at least philosophical questions around the morality of causing harm to avoid a greater harm (as illustrated in at least some version of “the trolley problem”). One way to do this is by observation and statistical analysis. We’ll look at that a bit more later in this post.

Tesla’s responsibility

As I said earlier, I’m not going to wade into any legal liability or regulatory discussions here. However, that doesn’t preclude me from discussing my view of Tesla’s moral responsibilities.

Two particular questions that arise are:

- Is it Tesla’s fault if someone misuses or abuses Autopilot?

- Is Tesla ensuring that the net safety impact of Autopilot is positive, and how are they measuring it?

People have different opinions about the former, but I am firmly in the “no” camp. If Tesla ever did anything to encourage that behavior, that would be a different story, but to my knowledge they have not. Further, they make it very clear that AP does not make a vehicle autonomous, and that drivers are responsible for their vehicles. However, I admit that this is not a clear-cut issue. I would put forward that it’s possible to encourage Tesla to do more (particularly with specific, practical suggestions!) without faulting them for not having done whatever you’re suggesting in the past. This is a new and uncertain area, and while we should absolutely require due diligence and the highest regard for safety from the start, we cannot expect perfection, and should encourage learning and improvement.

One such suggestion I have made is to require drivers to complete a short training program before using Autosteer or other Autopilot features beyond the basic Traffic Aware Cruise Control. I think even a 5-10 minute video followed by a 3-5 question quiz could go a long way to ensuring that drivers understand the limitations of the system and the intended use of the various modes. The video should show examples of things that can go wrong – e.g. the system failing to recognize a stopped vehicle protruding into the road, and show that the driver must handle this or they will crash. The quiz could ask questions like “Is it acceptable to operate a smartphone (e.g. texting) while using Autopilot?”, and “True or false: Autopilot will always come to a stop if an obstacle such as a stopped vehicle is in your path”. If you answer any incorrectly, you have to watch the video again before you can retry the quiz.

The second question is equally complicated but in different ways. For one, it’s unclear how to weight positives versus negatives. If driving deaths go down by 5%, but accidents go up by 10%, is that a positive or negative overall outcome? If 10 accidents occur because of AP which you know otherwise wouldn’t have happened, but 10 equally bad accidents which would have occurred are avoided because of AP, is that a wash? What if the numbers are 9 and 11? 1 and 19? 5 and 50,000?

Of course, those are all hypotheticals. It’s not feasible to ever have exactly that kind of data. So what kind of data could we get? Well, given my experience I’m drawn to the trusty Randomized Controlled Trial as a way of measuring effects and establishing the likelihood of causality. However, that approach isn’t practical here, so what’s the next best thing? Well, that would require data we don’t have – but that Tesla does. Unfortunately they don’t currently share it. So let’s look at what they do share, and what NHTSA publishes, to see what we can learn from it.

Tesla’s Vehicle Safety Reports

Tesla provides voluntary quarterly Safety Reports which provide a few interesting data points about the fleet. Primarily they focus on a particular metric: miles between accidents. They provide this number for a few slices of their fleet:

- Miles driven in which drivers had Autopilot engaged

- Miles driven where Autopilot was not engaged but Tesla’s active safety features (powered by the Autopilot technology) were enabled

- Miles in which Tesla vehicles were driven without Autopilot and without their active safety features.

In Q3 2020 (most recent available when I last went through this data exercise), those numbers were 4.59 million, 2.42 million, and 1.79 million miles, respectively. They also reference NHTSA data which reported at the time that on average in the US there is an automobile crash for every 479,000 miles of driving.

On the surface, this seems to paint a pretty rosy picture for Tesla. Their vehicles appear to be safer than average without any active safety or assistance features, and safer still when you add in their advanced safety features, and safest of all when Autopilot is in use. That certainly fits the marketing narrative! However, if you’ve ever spent 5 minutes with a data scientist, you might at least have some questions. Unfortunately, Tesla’s reports provide little in the way of further details – no raw data, and only limited definitions for what they even count as miles “with Autopilot active”.

What they do tell us is:

- They are using exact summations for the Tesla vehicle numbers, and are not using a sampled data set

- They count any crash in which Autopilot was deactivated within 5 seconds of the crash event as a crash where Autopilot was active

- They count all crashes where an airbag or other active restraint deployed, which they say in practice means virtually any accident at about 12mph or above

However, some things we don’t know include:

- Is the data from their worldwide fleet? Or US only?

- Are they applying any other filters, such as a minimum version of Autopilot hardware or software?

- What do they mean by “Autopilot active”? As I wrote in part 1, there is no specific feature or mode called Autopilot in the car. Rather, there are TACC (Traffic Aware Cruise Control), AutoSteer, and Navigate On Autopilot. I think most are assuming that Tesla is referring to the latter two modes, but it’s conceivable they’re also including TACC. I would guess that they are not including any Smart Summon usage.

- What makes up the “miles driven without Autopilot and without active safety features” data? This could be limited to older model Teslas which lack the new active safety features, or could be limited to drivers who disable some or all of those features in the vehicle’s Settings app, or some combination of the two.

So what insights can we glean from this data? Maybe not as many as you’d hope. Let’s start by laying out a few hypotheses which we’d like this data to help us validate or falsify:

Hypothesis 1: Tesla vehicles are safer than average vehicles even without active safety features being present or enabled.

Hypothesis 2: Tesla’s active safety features are effective at preventing accidents and thus make their cars safer than they’d otherwise be.

Hypothesis 3a: Use of Autopilot reduces the risk of having an accident.

Hypothesis 3b: Use of Autopilot does not increase the risk of having an accident.

Hypothesis 3c: Use of Autopilot increases the risk of having an accident.

For each of these, we can use a common key metric, and Tesla’s chosen metric seems reasonable enough: miles driven between accidents. You could state it other ways but that’s about as good as any.

Personally, I believe #1 and #2 are true, and I think #3a may also be. But I don’t have data to conclude that any of these are true, let alone to assess the actual difference in our key metric. I’ve now seen multiple very enthusiastic Twitter accounts aggressively defend the most naïve interpretation: that they’re all true and that Autopilot is “ten times safer than a human”. After all, 4.59 million is roughly 10 times larger than the NHTSA’s 479,000 number.
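For reference, here is the naïve arithmetic behind that “ten times” claim, as a minimal sketch. The four figures are the ones quoted above from Tesla’s Q3 2020 report; the dictionary keys and the function name are just labels I’ve made up for illustration. All this does is divide one headline number by another, which is exactly the comparison the rest of this post argues you shouldn’t lean on.

```python
# Miles between accidents, as quoted above from Tesla's Q3 2020 report.
# The key names are illustrative labels, not Tesla's terminology.
MILES_PER_CRASH = {
    "autopilot_engaged": 4_590_000,
    "active_safety_only": 2_420_000,
    "no_autopilot_no_active_safety": 1_790_000,
    "nhtsa_us_average": 479_000,
}


def ratio_to_baseline(figures, baseline_key):
    """Divide every figure by the chosen baseline figure."""
    baseline = figures[baseline_key]
    return {name: miles / baseline for name, miles in figures.items()}


ratios = ratio_to_baseline(MILES_PER_CRASH, "nhtsa_us_average")
for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}x the NHTSA figure")
```

The Autopilot figure comes out a bit under 10x and the no-active-safety figure a bit under 4x. Those ratios are only as meaningful as the two populations are comparable.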

Of course, that’s not actually how any of this works. But why not?

Well, let’s start with hypothesis 1. The naïve reading says that the data proves this true and that Tesla vehicles have roughly a quarter as many accidents per mile as the average vehicle (1.79 million versus 479,000 miles between accidents). But wait, the NHTSA data says it’s specific to the US. The Tesla data doesn’t specify, so I assumed it was worldwide. Clearly that could account for some difference (especially since the US reports 7.3 road fatalities per 1 billion km, Canada reports 5.1, and Germany reports 4.2 – source). And that’s just the beginning of what makes these numbers incomparable. The NHTSA data is based on an entirely different kind of reporting – it’s an estimate based on police and insurance reports, and it may even be using a different definition of “accident” (e.g. not all accidents result in airbag deployments).

Further, the NHTSA data shows that accident rates vary drastically by state, road type, vehicle age, and several other dimensions. Teslas are all relatively new, especially the vast majority of those using Autopilot, and they’re much more common in certain areas (especially near cities around the coasts). We can say for sure that Tesla owners are not “average” car owners – they are not representative of the usage patterns or driver demographics seen across all active passenger vehicles in the US. We don’t really have a good way to say exactly how they’re different, but we can have a pretty good idea that these differences mean we should expect that Teslas have fewer accidents for a given # of miles driven, regardless of anything Tesla has done.

In data science terms, we have absolutely no way to control for all of those differences. However, it’s pretty easy to conduct a more meaningful comparison than the one these rabid Tesla fans are doing with the very basic data that Tesla provides in their reports.

In order to do this, I’m leveraging the NHTSA’s Fatality and Injury Reporting System Tool (FIRST). As the name suggests, this database focuses on crashes which result in fatalities or injuries. Indeed, it enables querying of data from the unsampled Fatality Analysis Reporting System (FARS) as well as injury data from the General Estimates System and Crash Report Sampling System, and even some estimated “Property-Damage-Only” crash data. However, some data (e.g. make and model) is only available for fatal accidents, so I’ll lean heavily on that. There are differences here versus the more general accident data Tesla reports. However, as you’ll see below, it provides us with a lot of data we can use to better contextualize Tesla’s numbers.

In considering hypothesis #1, we must look for other explanations of the difference between NHTSA’s 479,000 number and Tesla’s 1.79 million number. If we can rule out all the other explanations we can think of, we’ll have a better basis for believing this hypothesis is correct. I’ll use the FARS data to illustrate that there are other explanations for at least some of the difference between these two numbers.

Since we don’t know exactly how Tesla computed their numbers, I’m forced to make some assumptions. Earlier I pointed out that we don’t know if Tesla’s data is US-specific or worldwide. For the purpose of this analysis, I will focus on data about vehicles and roadways in the US. We also don’t know the mix of vehicle models and model years in Tesla’s data. However, since Tesla first began selling the Model S in late 2012, we can assume that all vehicles in their data set are from no earlier than the 2013 “model year”. Further, we know that Tesla sold more vehicles in 2018 than in all years prior (thanks to the introduction of the Model 3), and that sales have grown since then.

Alternate Explanation #1: Newer vehicles are safer

One possible explanation for Tesla’s numbers being higher, then, is that newer vehicles overall have fewer accidents. To support this assertion, I looked for information about the distribution of vehicle ages on the road in 2019. According to IHS Markit, the average age of light vehicles in operation in the US in June 2019 was 11.8 years. Since we know that most Teslas on the road at that time were 2018 model year vehicles (or 2019 models, but only for part of the year), we can compare the fatal accident rates of 2018 model year vehicles versus 2007 model year vehicles in 2019.

2007 is also a good model year to use for comparison, as US vehicle sales were approximately 16 million that year, not too far shy of the 17.2 million sold in 2018. Of course, we can expect that nearly all 2018 vehicles were in operation in 2019, whereas many 2007 vehicles were no longer in use by that time.

Passenger vehicles involved in fatal crashes in 2019:

| Model year | Vehicles involved in fatal crashes |
| --- | --- |
| All model years | 39,412 |
| 2018 | 1,909 |
| 2007 | 2,165 |

And yet, despite fewer vehicles having been sold in 2007, and even fewer remaining in active operation by the time 2019 rolled around, we see that 2007 models were involved in 13.4% more fatal accidents than 2018 models were. This supports the idea that newer vehicles are generally safer than older ones. This intuitively makes sense, due to the increased risk of mechanical failure, the increased risk caused by deteriorated brakes and tires, and in consideration of the fact that safety features (and safety regulations) have improved significantly over the past 10-20 years.

To determine exactly how much of that 479,000 versus 1.79 million difference can be explained by this, we’d need to know more about the exact distribution of vehicle model years on the road in 2019, and the various accident rates of each. However, to get a rough idea of how sizable this effect could be, we can calculate some estimates:

If all 16 million original 2007 vehicles remained on the road, that is a fatal accident rate of 1 in 7,390 vehicles. For the 2018 vehicles, that rate was 1 in 9,009. That’s about a 22% increase in accident rate for the 2007 vehicles over 2018. However, we know that a lot of those 2007 vehicles were no longer in operation, whereas nearly all of the 2018 vehicles still were. I couldn’t find a great source for average rate of vehicle decommissioning, except some figures of around 5% per year. If we take a really simple 5% reduction per year from 16 million vehicles over say 10 years, you’re left with around 9.6 million. That works out to a rate of 1 in 4,434, which is more than a 100% increase over (i.e. about 2x) the 2018 rate.
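The back-of-the-envelope estimate above can be written out as a short sketch. The sales and crash counts are the ones quoted in this post; the 5% yearly attrition over 10 years is the same rough assumption, applied here as compounding. The function and variable names are mine, purely for illustration.

```python
# Inputs quoted in the post (approximate US sales and 2019 FARS counts).
SOLD_2007 = 16_000_000
SOLD_2018 = 17_200_000
FATAL_2007 = 2_165   # 2007 model year vehicles in fatal crashes in 2019
FATAL_2018 = 1_909   # 2018 model year vehicles in fatal crashes in 2019

# Rough assumption from the post, not a measured figure.
ANNUAL_ATTRITION = 0.05
YEARS_OF_ATTRITION = 10


def vehicles_per_fatal_crash(vehicles, fatal_involvements):
    """Express a rate as '1 in N vehicles'."""
    return vehicles / fatal_involvements


def surviving_fleet(sold, annual_attrition, years):
    """Vehicles still on the road after compounding yearly attrition."""
    return sold * (1 - annual_attrition) ** years


rate_2018 = vehicles_per_fatal_crash(SOLD_2018, FATAL_2018)
rate_2007_all = vehicles_per_fatal_crash(SOLD_2007, FATAL_2007)
surviving_2007 = surviving_fleet(SOLD_2007, ANNUAL_ATTRITION, YEARS_OF_ATTRITION)
rate_2007_surviving = vehicles_per_fatal_crash(surviving_2007, FATAL_2007)

print(f"2018: 1 in {rate_2018:,.0f}")
print(f"2007, no attrition: 1 in {rate_2007_all:,.0f}")
print(f"2007, surviving fleet of {surviving_2007:,.0f}: 1 in {rate_2007_surviving:,.0f}")
print(f"2007 vs 2018 rate: {rate_2018 / rate_2007_surviving:.2f}x")
```

Without rounding the surviving fleet up to 9.6 million, the compounded figure is closer to 9.58 million, which gives roughly 1 in 4,425 rather than 1 in 4,434. Either way the 2007 rate lands at about twice the 2018 rate.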

That alone would bring the NHTSA 479,000 number up to roughly 1 million. Of course, this is a super rough estimate. If older vehicles (e.g. 2002 models) have even higher accident rates, or if fewer of those older vehicles were on the roads in 2019 than I estimated, then this could account for even more of the difference. The point here is not to come up with an exact figure, but instead to illustrate that the sizable disparity (273%, or 3.73x) in accident rate for the NHTSA “average” accident rate versus Tesla’s number is likely the result of a range of compounding factors which must be considered before attempting to draw any conclusions about what these numbers mean.

Takeaway: It would be more fair and valuable to compare Tesla vehicles’ accident rates with those of “average” vehicles from the same model year, or at least the same range of model years.

Alternate Explanation #2: More expensive vehicles are safer

As of the end of 2019, Tesla’s cumulative sales in the US were approximately:

300,000 Model 3

158,000 Model S

85,000 Model X

These vehicles are considerably more expensive than the average passenger vehicle. This affects both the equipment and the population of owners/drivers substantially. Tesla owners are more likely to be older, more experienced drivers. They’re likely to be careful with their expensive purchases. They’re more likely to live in urban areas and drive more on city streets and highways, rather than rural/country roads.

So how about we try comparing the 2018 Tesla Model S crash rates to competitive Audi models? I chose Audi in particular as they tend to appeal to the same tech-savvy demographic as Tesla. In 2018, Audi reportedly sold 7,937 A7 class and A8 class vehicles (this includes the S and RS variants). The same year, Tesla reportedly sold 25,745 Model S cars. In 2019, only a single fatal accident was reported involving those Audi models from the 2018 model year. For the Tesla Model S, there were three. Given that there were approximately three times as many 2018 Model S vehicles on the road, this suggests these vehicles have approximately equal likelihood of being involved in a fatal accident.

Admittedly, these numbers are very small. I repeated this exercise comparing the 2018 Model 3 and the 2018 Audi A4 + S4. The former were involved in 11 accidents, the latter in 2. There were just over four times as many Model 3s sold in 2018 as there were Audi A4 class vehicles. This shows us a lower rate of fatal accidents for the A4/S4 vehicles than the Model 3, though again the numbers are small and pretty close. This translates to 1 in 12,707 Model 3s being involved in a fatal accident, and 1 in 17,283 Audi A4s being involved in one.
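To make the small-numbers caveat concrete, here is a quick sketch using the 2018 Model S and Audi A7/A8 figures quoted above (units sold that year and fatal-crash involvements in 2019). It shows how far the “1 in N” rate swings if each model had been involved in just one more or one fewer fatal crash. The helper name is made up, and using units sold as a stand-in for vehicles on the road is the same simplification the post makes.

```python
def one_in_n(vehicles, crashes):
    """Express a rate as '1 in N vehicles'; zero crashes gives infinity."""
    return vehicles / crashes if crashes else float("inf")


# (units sold in 2018, fatal-crash involvements in 2019), from the post.
MODELS = {
    "Tesla Model S (2018)": (25_745, 3),
    "Audi A7/A8 (2018)": (7_937, 1),
}

for name, (sold, crashes) in MODELS.items():
    observed = one_in_n(sold, crashes)
    one_more = one_in_n(sold, crashes + 1)
    one_fewer = one_in_n(sold, crashes - 1)
    print(
        f"{name}: observed 1 in {observed:,.0f}, "
        f"with one more crash 1 in {one_more:,.0f}, "
        f"with one fewer 1 in {one_fewer:,.0f}"
    )
```

A single additional Audi crash would halve its “1 in N” figure, and a single fewer would leave nothing to measure. That’s why these per-model comparisons should be read as rough indications only.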

The 2018 Ford Focus, on the other hand, had a rate of 1 in 5,667. This further supports the notion that more expensive vehicles are less likely to be involved in accidents (or at least fatal ones) versus lower cost models.

Takeaway: More expensive vehicles tend to have lower accident rates than less expensive vehicles. Tesla vehicles are more expensive than “average” vehicles, so it is expected that their accident rate is lower than average.

With more time, I could explore other possible explanations, e.g. that accident rates vary by region in a way negatively correlated with where Tesla sales are concentrated. With more data I could do a much better analysis, but I’m working with what I’ve been able to find publicly accessible and in a reasonably digestible form.

For now I’m content that the data shows Tesla vehicles are among the safest on the road, but that the degree of difference versus the NHTSA “average” number Tesla quoted is overstated. Please note that Tesla never specifically asserts that their vehicles (even without active safety features) are 3-4x less likely to be in accidents. They just publish these two numbers and let many readers make this naïve leap.

Effect of Active Safety Features

So what about hypothesis 2? Do Tesla’s active safety features help prevent accidents?

The data here is both interesting and confounding. The real problem is that we don’t know how this population, with its 2.42M miles between accidents, differs from the baseline Tesla population with their rate of 1.79 million miles between accidents. Is the latter wholly or predominantly made up of older Tesla models that lack these active safety features? Or is it entirely or predominantly vehicles where the driver has explicitly disabled these features? How many Tesla owners do disable some or all of these features? How are vehicles with only some of the features enabled counted?

At face value, these data points suggest that Tesla’s active safety features do prevent accidents. I believe this is true, but I wish we had better data to really understand the difference they make. As it stands, this is a positive indicator, but it leaves too many open questions to be a slam dunk, and that’s unfortunate. That said, even if the no-active-safety-features group is mostly older Tesla vehicles, they’re still relatively new vehicles in a similar price range purchased by similar drivers, so overall it’s probably reasonable to feel good about the impact these features are having.

Another challenge here is that we don’t have any other data to compare it to. We don’t know how many people disable active safety features in competitive cars, nor how many accidents occurred in that configuration. That would certainly be interesting to look at – for example, to see if Tesla’s active safety features are more (or less) effective than those of competitors.

I’m not going to spend any more time on hypothesis #2 though, as I think it’s probably the least contentious.

The big question: Autopilot’s impact

Which brings us to hypothesis 3 (a/b/c).

Tesla’s report of 4.59 million miles between accidents for vehicles with Autopilot engaged certainly sounds impressive. The immediately obvious point of comparison here is to their reported number for Tesla vehicles with active safety features enabled (but not using Autopilot). Looking at that, 4.59M versus 2.42M seems like a very compelling increase in safety. So can we conclude that hypothesis 3a is true? Well, unfortunately no.

Let me be clear: it may be true. If it is true, this data makes sense. But we can’t conclude that it is true because there are other possible explanations for this measurement. In fact, there are some fairly obvious explanations which likely account for at least some of this difference (and possibly most or all of it). To understand why this is the case, let’s look at what we’re comparing:

| | Miles on Autopilot | Miles not on Autopilot (but with active safety features) |
| --- | --- | --- |
| Vehicle make & model | Tesla Model 3, Y, S, and X | Tesla Model 3, Y, S, and X |
| Vehicle model year | ~2016 and newer | ~2016 and newer? |
| Has drivers who ever use Autopilot | Yes | Yes |
| Has drivers who never use Autopilot | No | Yes |
| % of miles which are on highways | Probably >95% | Probably far less than 95% |
| % of miles in poor weather | Probably smaller | Probably higher |
| % of miles in snow | Probably negligible | Probably higher |
| Countries/region represented | Only those with AP available, more likely those where it isn’t limited by local regulations. (Unless all the data is US only) | All Tesla markets (unless all the data is US only) |

Just as with the other hypotheses above, there’s no perfect way to test the hypothesis #3 variants. And unfortunately, the data made available just isn’t detailed enough to draw any significant conclusions. It supports the idea that AP is at least not obviously unsafe, but the case for it being a net safety win is lacking.

To illustrate further why the 4.59M number and the 2.42M number are not directly comparable, let’s take one more look at some NHTSA FARS data.

Fatal accidents involving a 2016-2020 Tesla (in 2019)

| Road type | Fatal accidents |
| --- | --- |
| Interstate, freeway, or expressway | 8 |
| Other road types | 27 |

Most accidents, including for Teslas, don’t happen on interstates. In fact, it’s likely that this difference is even more drastic for non-fatal accidents (as most of the highest speed accidents likely happen on interstates). This supports the idea that we should expect Autopilot use to correlate highly with driving conditions which are associated with fewer accidents. This is correlation, of course, and not causation. That’s the big thing we’re missing, and unfortunately we aren’t going to get it with the data available today.
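Here is a toy illustration of why the mix of road types matters so much. Every number in it is invented for the sake of the example (the per-road-type crash rates and the highway shares are not from Tesla or NHTSA). The sketch assumes the crash rate on each road type is identical with and without Autopilot, and varies only where the miles are driven.

```python
# HYPOTHETICAL crash rates per million miles, identical for both groups.
CRASHES_PER_MILLION_MILES = {"highway": 0.2, "other": 0.6}

# HYPOTHETICAL share of miles driven on each road type.
AUTOPILOT_MIX = {"highway": 0.95, "other": 0.05}
NON_AUTOPILOT_MIX = {"highway": 0.30, "other": 0.70}


def miles_per_crash(mix, crashes_per_million_miles):
    """Aggregate miles between crashes for a given road-type mix."""
    crashes_per_mile = sum(
        share * crashes_per_million_miles[road] / 1_000_000
        for road, share in mix.items()
    )
    return 1 / crashes_per_mile


ap_miles = miles_per_crash(AUTOPILOT_MIX, CRASHES_PER_MILLION_MILES)
non_ap_miles = miles_per_crash(NON_AUTOPILOT_MIX, CRASHES_PER_MILLION_MILES)

print(f"Autopilot miles between crashes:     {ap_miles:,.0f}")
print(f"Non-Autopilot miles between crashes: {non_ap_miles:,.0f}")
print(f"Apparent advantage: {ap_miles / non_ap_miles:.2f}x")
```

Under these made-up inputs the Autopilot group looks roughly twice as safe in aggregate, even though Autopilot has no effect at all on any individual road type. The gap comes entirely from where the miles are driven, which is the confound that road-type-filtered data would remove.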

This hypothesis could be better tested with better data. For example, Tesla could provide this same data filtered by road type, perhaps even filtering to specifically roadways where their Navigate On Autopilot mode is supported – as these are likely to be the same roadways where most Autopilot use happens anyway (and best matches their description of where Autopilot is intended to be used – e.g. divided highways). My expectation is that if you did this analysis, you’d find that the numbers would be far closer – possibly even too close to make a strong case for hypothesis 3a. On the other hand, I think it could help make a much better case for hypothesis 3b – that Autopilot is no less safe than driving on the same roads in a Tesla with the Autopilot-based active safety features (which we can reasonably say is among the safest ways to be driving).

With more data and a bit more work you (or they) could study specific routes, and compare AP-traversals versus non-AP-traversals. You could conduct comparisons focused on specific cities or regions, and see if the AP miles are consistently safer than the non-AP ones. Tesla is in the best position to conduct this research, given the immense amount of high-quality and self-consistent data they have at their disposal. They could conduct this research themselves, or provide a drop of the data to a third-party and commission a detailed study. I’d even go so far as to guess that Tesla will do one of those things at some point – perhaps once they’re more confident in an unambiguous, conclusive, positive result.

If you follow me on Twitter, you may have noticed that I’ve taken a keen interest in the topic of autonomous driving, as well as Tesla’s Autopilot technology and the company more generally. Twitter is rarely an ideal medium for conversation of any length, depth, or nuance – so I thought it might be time for me to revive the ol’ blog and to write about Autopilot. In particular, some things I’ll try to cover include: What is Tesla’s Autopilot? How does it work? Is it safe? How crazy are Elon Musk’s claims about “full self-driving” and “robotaxis”, and what do I think might happen over the next few years?

I wrote most of these entries back in February while on an airplane, but never got around to posting them. I’ll insert a few updates here and there but otherwise this post is pretty much as written at that time.

Disclaimer: I’m an enthusiastic Tesla owner and I own a small chunk of Tesla stock. However, I strive to be objective about the company, and I’ll let you judge my success at that for yourself.

What is Autopilot?

Autopilot is the name Tesla gives to its suite of Advanced Driver-Assistance Systems available today on their vehicles. Rather than having a fixed definition or referring to one specific feature, Autopilot refers generally to the overall hardware + software system, and its capabilities have evolved over time. The capabilities offered by the system today include specific features such as Traffic-Aware Cruise Control, AutoSteer (aka Lane Keep Assist, plus Automatic Lane Changes), Navigate On AutoPilot (automatic navigation-based highway driving), and Smart Summon.

Update: New functionality added since I originally wrote this piece includes “Traffic Light and Stop Sign control” and some recent improvements to speed limit detection.

Traffic-Aware Cruise Control

The most basic capability AutoPilot offers is Traffic-Aware Cruise Control, or TACC. More generally, this is a form of Adaptive Cruise Control. Where regular cruise control simply maintains a set throttle or speed until adjusted or disengaged by the driver, ACC systems measure the speed of a vehicle (or vehicles) ahead and use this to dynamically adjust the cruise control setting.
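As a deliberately oversimplified sketch of that difference (and emphatically not how Tesla’s or anyone else’s production system works), the two behaviors can be contrasted like this. The function names are made up, and real systems also manage following distance, acceleration limits, and much more.

```python
from typing import Optional


def cruise_target_speed(set_speed: float) -> float:
    """Regular cruise control: hold the driver's set speed, nothing else."""
    return set_speed


def adaptive_target_speed(set_speed: float, lead_speed: Optional[float]) -> float:
    """Toy adaptive cruise control.

    With no vehicle detected ahead (lead_speed is None), behave like regular
    cruise control. Otherwise never exceed the set speed, but slow down to
    match a slower vehicle ahead.
    """
    if lead_speed is None:
        return set_speed
    return min(set_speed, lead_speed)


print(adaptive_target_speed(70, None))  # open road: holds the set speed
print(adaptive_target_speed(70, 55))    # slower car ahead: matches it
print(adaptive_target_speed(70, 80))    # faster car ahead: stays at set speed
```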

The earliest implementations of this concept have been around for almost three decades now. The earliest versions used lidar sensors (laser-based range finding) and would only adjust the throttle – they would not apply the brakes. These were very niche offerings, some of which were limited to the Japanese market. In 1999, companies like Cadillac and Mercedes began offering their own systems as high-end options on some of their luxury cars, and their implementations were based solely on a front-facing radar. These gradually evolved over subsequent years, but remained relatively rare in the market. These were squarely positioned as convenience features, primarily designed to remove the need for small throttle adjustments at highway speeds. Most systems couldn’t stop the car, and they were not intended or able to avoid accidents.

Later versions of these systems would gain additional abilities, including the ability for some systems to stop the car, or even to stop and then resume motion in traffic jams. Eventually these systems began to offer forward collision warning and emergency braking capabilities – features which have become fairly ubiquitous in recent years.

Tesla’s TACC mode is similar in concept and implementation. It is, however, one of the more advanced (if not the most advanced) systems of this kind available today. It uses both radar and vision to track multiple vehicles, including vehicles ahead of the one you’re following (partly by bouncing radar underneath the car directly in front of you), as well as vehicles in adjacent lanes. Today’s version of the AutoPilot software will even predict “cut-ins” (e.g. a car changing into your lane in front of you), regardless of whether the car signals its lane change. It will also adjust its speed automatically based on other factors including its knowledge of speed limits via its map data, the curvature of the road, and the speed of traffic in adjacent lanes. This doesn’t eliminate all need to adjust the chosen speed manually, but it does cover a lot of the most common cases.

However, as I’ll discuss in more detail later, it is not able to do all of these things perfectly, and it is not able to stop for all obstacles in the road. For example, cut-in detection will sometimes not notice a car merging in as soon as you’d probably like, and if you come around a curve at significant speed, it may not notice some stopped traffic ahead of you until the last second (or in rare cases, at all). Tesla is extremely clear about this in the manual, and in the user interface for the system, and it is important for any driver using this (or any Adaptive Cruise Control system) to remember that they’re ultimately responsible for the operation of their vehicle.

Tesla’s owner’s manuals say: “Traffic-Aware Cruise Control is primarily intended for driving on dry, straight roads such as highways and freeways. It should not be used on city streets.” However, like standard cruise control found in most cars, where and when it is enabled is up to the driver. In fact, Tesla’s cars do not offer a non-traffic-aware cruise control feature – AutoPilot’s TACC is the only cruise control in a Tesla.

AutoSteer

AutoPilot’s next more advanced mode of operation is called AutoSteer. AutoSteer refers to the ability of the system to track the road and lane markings, and to steer the vehicle to stay within them. AutoSteer cannot be enabled without TACC, though the driver can temporarily override speed and acceleration using the accelerator pedal without disengaging AutoSteer.

Several car manufacturers offer various Lane Keep Assist systems in cars you can buy today. The exact functionality and implementations vary a good deal. Many operate only at specific speeds, or where lane markings are pristine. In my experience some barely seem to work at all, to the point where I believe they are at best useless and at worst dangerous. Others are quite competent, and the quirks in their behavior range from unpleasant (e.g. “bouncing” between sides of the lane) to subjective matters of preference (e.g. strict adherence to the center of the lane).

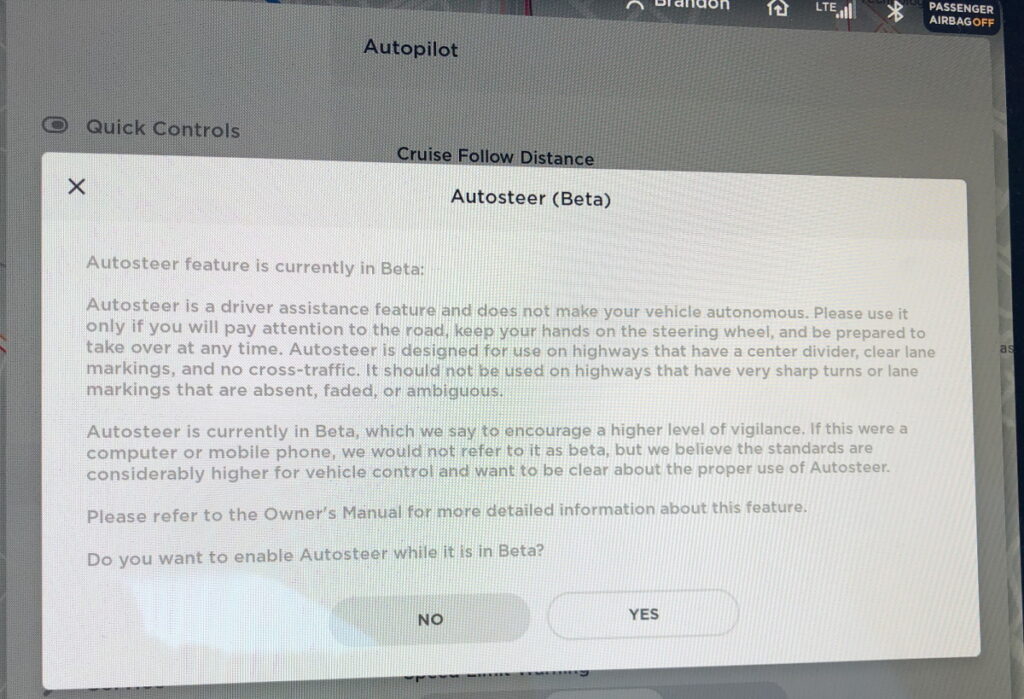

Tesla warns drivers about AutoSteer’s limitations both in the owner’s manual and in a screen you must accept before enabling the AutoSteer feature in the car’s Settings app. This must be done while parked, and separately for each driver profile. Specifically, they say:

In practice, AutoSteer can be enabled on most roads, so long as AutoPilot can identify the boundaries of the lane you’re in (which it can do surprisingly well even with little in the way of markings). If you are not on a supported highway, however, it will limit the car’s maximum speed while on AutoSteer – I believe to 5MPH over the known speed limit for the road, or 45MPH for places where the speed limit is not known. However, it’s really only useful for highway operation, and in particular it is best suited to divided highways with no cross-traffic (e.g. with on-ramps and off-ramps instead), just as Tesla says. Any use outside of those conditions requires extra vigilance from the driver.
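The speed cap described above can be written down as a tiny Python sketch. The 5 MPH and 45 MPH figures are the values I believe apply, not confirmed numbers, and the function and constant names are hypothetical.

```python
from typing import Optional

# Assumed values, taken from the observed behavior described above.
OVER_LIMIT_ALLOWANCE_MPH = 5
UNKNOWN_LIMIT_CAP_MPH = 45


def autosteer_speed_cap(on_supported_highway: bool,
                        speed_limit_mph: Optional[int]) -> Optional[int]:
    """Return the extra AutoSteer speed cap in MPH, or None if no extra cap applies."""
    if on_supported_highway:
        # On supported highways no additional cap is described here.
        return None
    if speed_limit_mph is None:
        # Speed limit not known for this road.
        return UNKNOWN_LIMIT_CAP_MPH
    return speed_limit_mph + OVER_LIMIT_ALLOWANCE_MPH
```

For example, on a 35 MPH surface street this sketch caps AutoSteer at 40 MPH, and on an unmapped road at 45 MPH.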

AutoSteer is in my experience quite adept at identifying lane boundaries even with faded or absent lane markings, and will use road edges and other indicators to figure out where it should go. It’s also very impressive at tracking the lane in all manner of weather conditions where I’ve used it. However, it is not perfect. Its behavior when lanes merge or get extra wide can be off-putting (e.g. in some cases it will try to move to the center when really it should stay to one side while the lane shrinks back to normal size).

In rare cases where there are multiple sets of lane lines (e.g. where old sets are visible after new ones have been put down), it can become very confused. Though to be fair, in many of those places it’s easy for human drivers to get confused too. When you hit this situation, it will make a loud alert sound and flash a big warning on the screen telling you to take over immediately. Generally it will still follow the correct path during this time, though the wheel may twitch back and forth a bit until you take control.

While engaged, AutoPilot will continually check to determine if your hands are on the steering wheel. The way it does this is by measuring torque (turning force) on the steering wheel itself, or interaction with any of the steering wheel buttons or scroll wheels. So if your hands are just gently resting on it while driving straight, it can’t tell if they’re there or not. Because of this, the system will periodically “nag” you to apply some pressure to the steering wheel.

The nag behavior is very dynamic, and I think quite clever. If you’re going straight and the system is confident about what’s ahead, it will go 30 seconds or more without detecting any torque before it nags you – the maximum length of time varies depending on speed. However, if the car turns the wheel at all, it will immediately know if your hands are there or not (based on whether there’s any resistance) and will immediately nag you if they are not. Further, it will nag you immediately any time it becomes at all uncertain about the road markings or what lies ahead. In general, I’m able to rest my left hand on the wheel in a way where I do not get nagged very often. When I do, it’s just a matter of applying a small amount of pressure momentarily and the message immediately disappears.

If you fail to respond to the nag message, it will quickly get more aggressive about getting your attention, first flashing the background of the whole “instrument cluster” blue, then making urgent audible noises, up until a point where it will begin to slow the car to a stop and activate the hazard lights. I’ve never let it get that far, but I’ve seen videos showing what happens if you don’t respond. This seems sensible, and I think it is actually something that could be valuable in all cars, regardless of AutoSteer type functionality. Fatigued drivers all too often fall asleep at the wheel with no automation or with regular cruise control enabled, or could be suddenly incapacitated by an urgent health issue. Having any car able to safely come to a stop in these situations can save lives.
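One way to picture the escalation is as a small state machine. The Python sketch below is a simplified model of the stages described above, not Tesla's actual logic: the stage names are hypothetical, the real timing between stages is not modeled, and it assumes that detected torque clears the nag at any stage (I have only observed that for the first message).

```python
from enum import IntEnum


class NagStage(IntEnum):
    NONE = 0             # no nag showing
    MESSAGE = 1          # on-screen request to apply pressure to the wheel
    FLASHING_BLUE = 2    # instrument cluster background flashes blue
    AUDIBLE_ALERT = 3    # urgent audible noises
    SLOWING_TO_STOP = 4  # car slows to a stop with hazard lights on


def next_stage(current: NagStage, torque_detected: bool) -> NagStage:
    """Advance one step of the (simplified) nag escalation."""
    if torque_detected:
        # Assumption: any detected torque clears the nag.
        return NagStage.NONE
    # No response: escalate one stage, saturating at the final stage.
    return NagStage(min(current + 1, NagStage.SLOWING_TO_STOP))
```

Calling `next_stage` repeatedly with `torque_detected=False` walks through each stage in order and then stays at `SLOWING_TO_STOP`.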

AutoSteer also supports Automatic Lane Changes. While AutoSteer is enabled, you can simply activate the turn signal in the direction you’d like to change lanes, and the AutoPilot system will execute the change. If the lane is clear, it will happen quite immediately. If it is not, the driver visualization screen will show the lane boundary and any cars in the way in red. AutoPilot will attempt to adjust speed to find an opening, and move over once it finds a spot. In some cases, it can be helpful to apply the accelerator to encourage AP to move in front of a car rather than slowing down and trying to move over behind it. In these situations, AutoPilot does what I think is an impressive job of melding “(hu)man and machine” operation, and what could be a complicated UX nightmare actually seems to be handled very seamlessly in my experience.

Navigate On AutoPilot

The most advanced mode of operation AutoPilot offers today is called “Navigate on AutoPilot” (or “NOA”). This is a pretty seamless addition on top of AutoSteer while a navigation route has been selected. When enabled, and on supported highways in supported conditions, AutoPilot will automatically take exits and interchanges to follow your chosen navigation route. It will also recommend or even automatically execute (if enabled in a settings page) lane changes to overtake other cars, move back out of the passing lane, and to follow the route (i.e. to be in the correct lane for an upcoming exit).

NOA is really good at some things and pretty good at others, with some significant caveats. Its performance and usefulness are very dependent on having accurate map data and GPS signal. It also will deactivate itself or temporarily limit its functionality if it detects certain conditions – including particularly poor weather, or some construction zones. In these cases, it will deactivate the Navigate On AutoPilot functionality, but revert to the normal AutoSteer behavior. These situations are communicated to the driver via recognizable sounds and a message about what happened, but there is no urgent alert or panic from the system as it will continue driving in the current lane, subject to normal AutoSteer behaviors and limitations. The system will also automatically resume NOA functions if/when the condition passes.

In the default configuration, NOA will execute maneuvers to take off-ramps without any kind of intervention, but it will only execute lane changes with a confirmation from the driver. It will make a sound, vibrate the steering wheel, and show an indicator that a lane change is suggested, and the driver can confirm it either by pressing the turn signal stalk in the corresponding direction, or by pressing the same button or stalk that engages AutoSteer (varies by model). It will then activate the turn signal, execute the lane change (same as if you used the Automatic Lane Change feature in regular AutoSteer mode), and then deactivate the turn signal.

If, however, you change the advanced setting to disable lane change confirmations (as I have), the car will behave differently. When NOA wants to make a lane change, it will make a sound, vibrate the steering wheel, and display a message on the screen just like in the default configuration – but it will then also turn on the corresponding turn signal. At this time it will check for torque on the steering wheel indicating that your hands are there and (roughly) that you’re paying attention. If no torque is detected, it will jump to the second level of “nag” mode immediately, and it will not change lanes until your hands are detected. Even if pressure on the steering wheel is detected right away, it will wait a minimum amount of time (5 or 6 seconds?) before beginning the lane change – what feels like an eternity compared to the usual Automatic Lane Changes initiated by the driver. Otherwise, the behavior is the same. Some consider the need to apply torque on the wheel to be a sort of “confirmation” despite Tesla calling this a “no confirmation” mode, and I think they’re mostly right in practice, but Tesla is right technically. One key distinction is that if you have your hand resting with enough weight on the wheel to be detected, it doesn’t matter which direction that weight is applying torque on the wheel – it can be the opposite direction of the lane change. It is, however, pretty clear that the system is being extremely cautious and wanting to give you ample time to disagree with what it’s about to do and stop it before it starts.

In some ways, that makes sense and is probably necessary for the current version of the software. On the other hand, it’s kind of disappointing and actually makes this feature less valuable. The delay in executing the lane change is often enough that the gap it could’ve moved into is gone – particularly around Seattle where drivers see turn signals as a “please come block me” message. Other times it works out fine but just makes you look like a timid driver. In the worst case, someone may think they’ve given you enough time, and decide that you must not have wanted to change lanes after all. Then you start moving just as they decided to pass, so then AP aborts the maneuver, and basically everybody gets annoyed.
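The “no confirmation” gating described above boils down to two conditions, sketched here in Python. This is only a model of what I observe from the driver's seat: the function name is hypothetical, and the 5 second default is my rough guess at the minimum wait, not a documented value.

```python
def may_begin_lane_change(torque_detected: bool,
                          seconds_since_signal: float,
                          min_wait_s: float = 5.0) -> bool:
    """Return True once the (modeled) NOA lane change is allowed to start.

    torque_detected: hands sensed on the wheel; direction of torque does not matter.
    seconds_since_signal: time elapsed since the turn signal came on.
    min_wait_s: assumed minimum delay before the maneuver begins.
    """
    return torque_detected and seconds_since_signal >= min_wait_s
```

The second condition is the one that costs you the gap: even with hands detected right away, nothing happens until the wait has elapsed.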

In my experience, NOA is very good at taking exits and interchanges, and generally pretty good at overtaking cars. The main problems I have with it fall into the following buckets:

- Relatively minor annoyances like wanting to get over to the rightmost lane unnecessarily early before an exit. Most often I’d prefer it to wait until after we’ve passed the last on-ramp where cars are merging in before deciding to move over for an exit, assuming I’m in a middle lane or passing slower traffic. It’s also pretty inconsistent about this – sometimes wanting to get over miles ahead of an exit, and sometimes not.

- Unexpectedly aborted lane changes. These are cases where the car starts executing a lane change in its normal fashion but then panics and abruptly swerves back to the center of the original lane. In theory this shouldn’t be a safety concern as it hasn’t fully left the original lane and any driver has the right to abort a lane change for various reasons (and this happens from time to time). But the abrupt manner in which it does it is off-putting, and likely disconcerting to other drivers. This used to be particularly problematic for me on the 520 bridge for some reason, and I suspect due to readings from the ultrasonic sensors picking up either the guard rails or the raised lane markers on the ground. I haven’t hit this lately or at least not regularly, so it may be something they’ve largely fixed. But that’s hard for me to say with any certainty as changes to the main trouble spots on my commute and to my usage patterns may be partly responsible.

Update: It’s been about 7 months since I originally wrote that down. Since then, I haven’t observed a single case of this behavior. A couple of times I’ve now seen the system hesitate for an instant halfway through a lane change, but then continue successfully rather than jerking back to the original lane. It seems like they may have fixed this issue or at least made it a lot less common or jarring.

- Out-of-date maps. Due to construction on the west side of the 520 floating bridge, the navigation data used for routing and NOA functionality no longer matches reality. To its credit, I haven’t seen the car do or try to do anything unsafe due to this, however it does result in the navigation system suddenly deciding I’m on a different road (not the highway) and rerouting me, and this definitely confuses the NOA system. It also triggers AutoPilot to reduce its speed because it suddenly thinks it’s on an off-ramp (accidentally matching the posted temporary speed limit due to ongoing construction, but not at all matching the speeds everyone drives there). Because of this, I now disable NOA during this section of my drive, and resume it afterward.

- Failures to take exits, where you miss your exit because NOA got confused and passed it. This has been extremely rare but I think it’s happened once or twice. Just as often, I have thought it was going the wrong way and made a last-minute change myself, only to realize I was mistaken and NOA was correct. So I guess we’re about even on that count.

- The really confused stuff. I can only think of one example, but I did once activate NOA very close to my exit for work in some heavy rain, actually unsure if it would even enable or give me the “poor weather” message. However, it did enable, but then proceeded to get confused and apparently think that I was driving on the shoulder or a non-existent lane that was ending, and not the rightmost lane where I was and needed to be. It then wanted very badly to change lanes to the left. I of course could override it and did so, but it was one of the more disconcerting experiences I’ve had.

Smart Summon

Smart Summon is really the closest thing to autonomous driving offered by Tesla today, as it enables the car to drive without a driver being present in the vehicle. Technically speaking though, Tesla considers you to be operating the vehicle remotely – and responsibility still falls on you the “driver” operating the vehicle remotely via the app. This can make it pretty terrifying, and is why I suspect usage of it is pretty limited.

The basic idea is that you launch the smartphone app, select Summon -> Smart Summon, and then you either use the “come to me” button or you place a pin on a satellite view map of where you are. The app requires you be within a certain range of the car, and that the car is not on a public road. It is intended only for use in parking lots or private driveways.

The app will show you a line depicting the planned path the vehicle will try to take, and sometimes you can futz around with the pin to adjust its plan before it starts. Its actual path will vary depending on what it encounters along the way, but it’s good to look at the planned path and make sure it is sane before you start.

When you’re ready, you press down on the “summon” button, and hold your finger down. The car will light up and if the coast is clear, it will quickly begin moving (usually backing out of its parking spot). I think parking head-in is preferable if you’re going to use Smart Summon, as the car has a better view of the ground behind it – there’s a blind spot just in front of the bumper in the forward direction, where it only has ultrasonic sensors to detect potential obstacles. When backed into a spot, I believe the car will actually try to reverse a little bit before going forward (if it has clearance behind), to give itself a better view of what’s immediately in front of it before proceeding.

The car will then leave the parking spot and make its way to you or the pin you placed on the map. In general it will use its turn signal when making turns (even when a human driver probably wouldn’t), and will stop at “intersections” to make sure they’re clear before proceeding. It seems to try to stay to the right of the road/aisle, but it doesn’t always get this quite right. It will sometimes stop or slow down for no apparent reason, and will sometimes stop more abruptly than necessary when it sees another car move near its path. It will also sometimes wait longer than necessary once the path is clear. In general, these are cases where it’s overly cautious, and frankly I’m okay with that at this point.

I’ve tried the feature out many times, and in a few cases it’s been useful (e.g. kept me dry during some rain), but mostly it’s just neat. I’d estimate my success rate with it as:

~50% of the time it does basically exactly what I want

~30% of the time I get scared and give up, despite it not doing anything wrong

~20% of the time it gets confused and starts going the wrong way, at which point I stop it and make an embarrassing dash to “rescue” it and not be in anybody’s way

Most of my usage has been in Microsoft parking lots (before we started working from home in February), usually in the evening when there aren’t many pedestrians or cars around. For that 30% of time where I give up, it’s usually because my vantage point makes it hard to tell how close it is getting to a parked car or curb, or I see people or fast-moving cars coming toward it and I get too nervous. I’ve never seen it take any action that would result in injury or damage, but it’s definitely terrifying to have your (very expensive) car moving on its own even remotely near to a wall or another car.

For the most part, my concerns while using it are that I’ll annoy or inconvenience others. Fortunately, the few times where I thought someone might be annoyed or alarmed, they actually just wanted to come tell me how amazed they were about what they’d just seen. I suspect some of this is a matter of where I’ve been while using it, though.

As with other AutoPilot features, I expect this to improve over time and hope that eventually it will become something that can be more easily relied upon and less of an anxiety-inducing party trick.

Traffic Light and Stop Sign Control

The most recent addition to the AutoPilot suite is called “Traffic Light and Stop Sign Control”. This is the latest “beta within a beta” feature to be added for Tesla owners who have purchased the most advanced “Full Self Driving” package (more on that name coming in another post) with the latest AutoPilot hardware.

When enabled, this feature allows the car to stop itself for traffic lights and stop signs in both the TACC and AutoSteer modes. When first released, this feature meant the car would stop at all traffic lights, regardless of what color/state they were in. So if you approached a green light, the car would tell you it’s about to start slowing down, and then you’d have to either press the stalk or briefly tap the accelerator to tell it you wanted it to proceed. If you did this and then the light changed yellow or red, it would again begin to stop. When it detected a light as red, it would not proceed unless you manually took over accelerating.

In an update a month or so later, this functionality was updated such that the car will now proceed through a green light automatically if it sees another car in your lane go through it. Otherwise the behavior is as before. I think this approach makes a lot of sense, and is a great example of starting with a conservative and safety-biased behavior which enables them to measure how often the driver agrees with the system, then iterate until their safety goals are met, before enabling more complete automation of cases where higher risk is inherently present.
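The decision rule, as I understand it from using the feature, can be sketched in a few lines of Python. This is a model of the observed behavior, not Tesla's code; the function and parameter names are hypothetical, and stop signs are left out.

```python
def should_stop_for_light(light_color: str,
                          driver_confirmed: bool,
                          lead_car_went_through: bool) -> bool:
    """Return True if the car (as modeled here) slows to a stop for the light.

    light_color: "green", "yellow", or "red".
    driver_confirmed: driver pressed the stalk or tapped the accelerator.
    lead_car_went_through: a car in your lane was seen going through the light
        (only matters since the later update).
    """
    if light_color == "green":
        # Green: proceed only with driver confirmation or a lead car to follow.
        return not (driver_confirmed or lead_car_went_through)
    # Yellow or red: stop, even if the driver confirmed while it was green.
    return True
```

Setting `lead_car_went_through` to `False` everywhere reproduces the original release, where every green light needed an explicit confirmation.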

While it may sound like this requires a lot of “baby sitting”, I’ve found it really works quite well. Most of my usage of this has been in the TACC mode, as AutoSteer isn’t currently useful or appropriate for the kinds of roads where this is useful. It does need more work, and I’d only recommend it to “advanced” AutoPilot users who want to try the latest and greatest but who will be very actively engaged with the system, and take the necessary care in its operation (plus being willing to put up with its foibles).

As with AutoPilot in general, this is not a “set it and forget it” system. Use of this, even with just TACC, means engaging and disengaging it as needed throughout your drive. When it stops at stop signs, for example, you often will need to disable it so that you can inch forward a bit to see what’s coming before you proceed. It doesn’t (yet) do anything like that on its own. It stops at the stop line, and when you tell it to go it will go. It’s also not really ideal for turns, even though it does generally maintain a lower speed and/or match the speed of a car turning in front of you – it just isn’t very natural yet and works best if you disable it, take the turn yourself, and then re-enable it.

However, on long county highways and country roads, like the one we took to our campsite for Labor Day weekend, it makes for a nice upgrade over “plain” cruise control or even “classic” TACC. You can go for very long, straight stretches without having to touch the accelerator. Just minding the steering and paying attention in case you need to brake for something unexpected.

It will be interesting to see what Tesla does next with this. They say they’re working on supporting turns, which seems like it will take things to a new level if they can make it work well. This is clearly a very complicated thing to do which they need to get absolutely right in order for it to be used safely. I wouldn’t be surprised if, just like with Traffic Light & Stop Sign Control, they start with the simplest case. Requiring the driver to confirm that it’s okay to proceed, and perhaps only supporting certain intersections, right turns only, or some other conservative limitation like that. Then over time they can get progressively more sophisticated.

More to come

I’ve already written up a great deal about the topic of safety, including some analysis of the data that’s been made available by Tesla and others. I’ll do a pass through that soon and get it posted here as Part 2.

Return to the Empire

It’s hard to believe that three years have passed since I was here posting my farewell to Microsoft. In early 2013 I ventured out into the big world of startups, and life has been a bit of a rollercoaster ever since. First I took on some fun projects of my own. I built a unique news app + service called Newseen, a little game called Cattergories, and then made Tweetium – a Twitter client that became the most popular third-party client in the Windows Store (and has been on the Top Paid list for over a year). After a year on my own, I joined a very early stage startup, where I spent more than a year and a half building the Zealyst web+mobile app.

Over the last three years I tried a lot of new things. I tried some things that worked out well. I tried a bunch of things that didn’t work at all, but which I would like to try again someday and do better. I also tried at least a few that I will do my best to avoid at all costs. I consider all of these valuable experiences. I’ve learned a lot, and found that there’s always more to learn.

I learned that building a business is hard, even with a great idea and a passionate, creative team (and even if you have some great customers who want what you’re offering). I learned that raising money is hard. I saw firsthand some of the unique challenges faced by women founders like ours, and many more challenges which I’m sure most startups face. I learned that stretching yourself too thin can take a real toll on mental and physical health. I learned the excitement of making a big sale, the thrill of deploying a brand new homegrown service to real users for the first time, the anxiety of patching problems in production, and the heartbreak of cultivating business relationships for months only to see them fall through due to reasons beyond your control. I learned the importance of having a strong, caring, supportive team – I am eternally grateful to have found that. And I am very, very proud of what we built together.

It’s easy to look back and focus on things I could’ve or should’ve done but didn’t (e.g. traveled more, ported Newseen to iOS and Android, spent more hours on Zealyst, been more or less involved in business strategy or fundraising, moved to Hawaii, etc). On the other hand, these last years have brought me new friends, new skills, new perspectives, and I think new self-awareness. While I relished the freedom, potential, and challenge that came with building a new business, I also came to long for the impact that I had when I worked on software used by a billion people. Further, I’ve missed being part of a fully-funded team of developers (and Program Managers!). They say you never fully appreciate what you have until it’s gone. PM friends, consider yourselves fully appreciated 😉

Over the last few months, we’ve been winding down Zealyst operations as the company (at least in its current form*) comes to a close. This has afforded me some time to do a little bit of traveling, relaxing, soul searching, and exploration of what I might want to do next. I’m incredibly grateful to be in a position where great options are plentiful. After a lot of consideration, I decided that the right next step for me is to take what I’ve learned these last few years and apply it in a place where I know my passion and level of impact are strongest.

As you’ve probably figured out by now, I am excited to announce that I have accepted an offer to return to Microsoft. Starting next week, I will once again be a developer on the Windows Shell team. Over the past couple of months I’ve discovered a Microsoft and a Windows team which is both familiar and yet substantially reinvented. They’ve begun the incredible task of changing Windows into a new kind of OS-as-a-service, which is a change to the development process and culture as much as to the code and how it’s delivered. I am very impressed with the progress they’ve made over the last couple of years, and even more impressed with the ambition to bring the best parts of the mobile + cloud delivery and user engagement models to all Windows devices. I can’t go into specifics at this time, but suffice to say that I believe the team I’m joining is crucial to this effort, and that it’s a compelling time and place to apply my strengths and experience.

I’d also like to thank everyone who’s given me advice, offered a variety of compelling opportunities, or welcomed me back into the Microsoft fold. I am immensely grateful for all of this. To all my Microsoft friends and colleagues, I’ll be seeing you around campus soon!

There is no mobile ecosystem

This morning I saw a snippet of a new blog post by Benedict Evans (of a16z) float by on Twitter that made me scratch my head. It posited that while the iPad Pro and Surface Pro “look similar”, the former is part of an innovative new “mobile ecosystem” that is on the rise, while the latter is the dying breath of a withering PC ecosystem. I called BS.

Why? Because there is no mobile ecosystem.

The PC ecosystem is real. For decades now it has had Microsoft and Intel at its center. Orbiting them were Windows software developers, IHVs, and PC OEMs – the latter fighting over customers and spewing out dollars, most of which were sucked in by Microsoft and Intel’s immense gravity. In the early 2000s this system was growing rampantly, and was stable enough to weather storms like a string of much-publicized security problems, and the drunken stumblings and missteps of the Longhorn/Vista years.

However, as time went on, and complacency set in, things started to wobble a bit. As innovation in this system essentially stopped, demand for new PCs started to slow, and OEMs started driving down prices and building more and more “disposable” machines. Then a disastrous thing happened. Apple introduced the iPhone.

Almost overnight, software developers (who had become bored and disillusioned by all the unrealized promises of Longhorn) turned their attention to this entirely new category of devices. Apple didn’t even have a public native app platform, so developers created the first mobile web apps and frameworks, and then began hacking and reverse-engineering Apple’s internal API set. Apple didn’t provide an app store, so developers built their own. Thus began the emergence of the Apple ecosystem.

Apple saw the fragility in the PC ecosystem and wanted no part of it. They already built the OS and the hardware, and soon decided they’d like to control even more. They even figured out how to exert unprecedented control over the cell carriers. However, that first iPhone showed that independent developers were not something they could ignore (as they did with the iPod, for example). So what did they do? They offered those developers a tight leash from the very beginning, with an institutionalized flow of cash to the mothership included for good measure. You would only sell apps and content through the App Store. You would only develop your apps using a Mac. You most definitely would not build anything that Apple felt threatened by, or use a technology they deemed inadequate. And you would give Apple a third of your income and thank them for the privilege.

The mobile smartphone market is clearly critical to the Apple ecosystem. It’s where they first got traction, because they basically invented it and had no (meaningful) competition early on. But even with the iPad included, mobile is the beginning, not the end, of the Apple ecosystem. They’ve leveraged it (in a very 90s Microsoft way) to get the vast majority of developers onto Macs. This is really important. It creates a very difficult-to-break feedback loop. There’s significant resistance to breaking developers away from the Apple ecosystem because the Apple ecosystem is where devs *live*, even if they try an Android phone, for example.

Apple is pushing to extend this thriving ecosystem into new markets like TVs/set-tops and wearables. The iPhone is the center of gravity in Apple’s ecosystem, and their other products revolve around it. The Apple Watch requires one. The Apple TV is meant to work with one. Macs are required to develop for one. But what Apple has never had any desire to participate in is a mobile ecosystem. Perish the thought.

Now that bit from Benedict’s post that caught my eye was about the iPad Pro. So where does that fit in? I don’t think the iPad Pro is a product Apple came up with to expand their ecosystem. I think it’s a bulwark against the Surface (and similar modern tablets/PCs) encroaching on their Mac (and to a lesser extent, iPad) business. It might get traction in some nice niches/verticals, but I think its primary purpose is defending their ecosystem, not expanding it.

Google isn’t cultivating a mobile ecosystem either. They’re cultivating a Google ecosystem. The mobile part of that ecosystem is also strong for two reasons:

A) Price. Or in more words: They played the Windows game of old and let OEMs race to the bottom, flooding the market with cheap Android handsets. Except they didn’t care about extracting profit because at that time phones were not an important part of Google’s ecosystem.

B) Carrier support in the early days from every carrier that wasn’t AT&T and was desperate to be in the smartphone space. Apple got too comfortable and took too long to address this, which gave Android a bigger opening than it might’ve had if Apple had broken carrier exclusivity sooner.

Originally, the center of Google’s ecosystem was search. They had content publishers and advertisers orbiting their search advertising behemoth, and projects like Android were just meant to feed and protect that lucrative system. Times change, though, and so have Google’s priorities. Where they once saw devices as Amazon does (places to offer their goods and services), they seem to be increasingly seeing devices (and Android in particular) as the center of the Google ecosystem, with their services orbiting Android the way Apple’s watch is tethered to the iPhone. This is probably less a matter of their desire, and more a response to competitors. Apple, Microsoft, and Amazon are all reorganizing their businesses around a model that puts them at the center of their own ecosystem. Ecosystems which are much more self-sustaining than the PC ecosystem of old.

That brings us to Microsoft. Initially they were either oblivious or in denial as to the ecosystem amassing around Apple. Later, they saw Apple raking in cash with their model, and Google “succeeding” with Android using the old Windows/PC model (sans the profit), and said “let’s do both of those things!”. In phone land, the result was a handful of third-party Windows Phone devices that all had identical components. With no ability to make competitive deals with component vendors, and hamstrung waiting for Microsoft to release new device class specifications, none of these OEMs stood a chance of competing with Apple or Google’s partners who could do whatever they wanted. They had no userbase to offer to developers, and a severely limited platform built on, no joke, Silverlight. They even wanted to imitate Apple so badly they bragged about leaving out copy-and-paste from the first release of Windows Phone 7. That’s how seriously these people took mobile.

On the PC side, they took a slightly different approach. Being like Apple meant spinning up an in-house hardware unit to build the Surface. At the same time, they also tried the Windows Phone model of building detailed ARM device specifications and “permitting” a few vendors to build virtually identical devices. Oh, and they also tried letting other OEMs roam free on Intel hardware. Cynically I call this the “have your cake, eat it, and sell it back to them too” approach. You could also consider it the “try everything and see what works” approach. Neither conveys a high degree of focus.

To their OEM partners, this was all an experiment, an attempt to establish a “premium” tier and to motivate and inspire those partners to innovate. In reality, whether the original intention or not, this was the beginning of realigning the PC solar system. If this was the plan, it was sort of brilliant. Microsoft saw the PC ecosystem slowly collapsing, and began preparing itself for a new universe where it would need to take on a different role, with Windows at the center of an ecosystem surrounded by hardware of all kinds (including servers in an Azure datacenter). All the while keeping PC OEMs feeding them while Microsoft prepared for their inevitable demise.

Whatever the plan was back in that Windows 8/RT timeframe, the execution, obviously, did not work out so well. The real sin wasn’t the failure to burst into the mobile market, but that in doing so they destabilized the strongest part of their ecosystem at that critical moment. It’s taken years to even begin undoing that damage. In the long run maybe this will turn out to be a growing pain. Or it may turn out to have been a crucial misstep from which they’ll never recover.

In fact, I’d attribute the Windows 8/RT disaster to exactly the kind of thinking that Mr. Evans shared in his post. That’s how you get to the idea that those 1.5bn PCs in use, and the ~0.4 billion a year sold, don’t matter, and that it was “okay” if they all stayed on Windows 7. Because all that matters is some non-existent “mobile ecosystem” that everything needs to immediately be a part of. So why not bet the company on it?

It turns out, however, that mobile didn’t eat the world. PCs (at least in the traditional sense) may not be a growth market, but they are a crucial part of Microsoft’s ecosystem, and will be for some time. Mobile is a growth opportunity for them. A huge one. But succeeding there these past few years was made so much harder by the disarray of their existing ecosystem.

That brings us to today. Microsoft’s ecosystem is showing signs of stabilizing, with the Surface Pro 3 and now Windows 10 providing some much-needed energy and positivity in their PC business. The cost of this is clear: Microsoft has all but entirely exited the mobile market over the last year or two. Whatever little progress they’d made with Nokia and WP 8.1 was left to evaporate while the company fixed PCs. Sure, they have promised two new flagship Lumias before the year comes to a close. But everyone paying attention can see that these are (far too late) stopgaps while they “retrench”. The excitement their new head of hardware had for these at the announcement event was… was… what’s the opposite of “palpable”?

That’s not to say they’re giving up on mobile. Not at all. But it’s going to take more than competitive devices and a competitive platform to make a dent in that market. It’s going to take a Microsoft ecosystem firing on all cylinders, with attractive offerings for customers and developers. Developers are the hardest nut to crack. And they are absolutely not looking for a new mobile platform to support.

So what is Microsoft doing to win developers today? A few things:

- Aggressively courting them with Azure.

- Taking a leadership role in the evolution of the web’s technology and standards.

- Creating the small beachhead that is Visual Studio Code (free open-source code editor for Mac, Linux, and Windows).

Notice I didn’t even mention “building a modern, universal platform”. They’ve done that. But without users or the opportunity to build something new and unique, this means nothing. It’s a prerequisite for success, not a strategy.

What can they do? I look at the problem three ways:

- Bring all your users to the table. They don’t have a meaningful number in mobile. But they have 130+ million on Windows 10 PCs, and it’s a fair bet they’ll march that number up to a billion over the next few years. Find ways to get these users buying apps, and you’ll attract developers. They know this. They’ve just struggled to figure out how. Windows 10 is trying some new tactics here that they’re going to be dialing up a bit soon, and I hear early indications are that they’re having some success with this (meaning more users are buying apps from the Win10 store). But just driving users to the store isn’t enough. They need to encourage a PC app renaissance, and I have a bunch of ideas for how to do this (perhaps for another post).

Also, adding 15 million and growing Xbox One users who love to spend $$ into the mix will not hurt.

- Let developers do something unique. This is what drew developers to the iPhone, and Apple is actually really great at this. HoloLens could be a long-term play for this, but that’s clearly not enough. Kinect might’ve been a big missed opportunity here, and Xbox might still be the key place to pull it off. That’s because letting developers do something unique generally means giving them unique hardware to play with.

- Find developers where they live. Microsoft is really strong at developer tools. Apple is kind of atrocious at them. So what’s the opportunity here for Microsoft? Here’s a bold vision they could adopt:

Make Microsoft’s tools be the best way to build an iOS app.

What could that look like? Here are some more specific ideas:

- Make the Surface Pro/Book the best device for building iOS apps. Make Visual Studio into the best Objective-C and Swift IDE. Make the best emulator experience (newsflash: it doesn’t even need to run iOS!). Provide the best cloud-based build environment (a la PhoneGap Build) running on some Macs in a Microsoft datacenter, to get around Apple’s licensing restrictions.

- Make Windows 10 on a Mac the best iOS development experience. Buy up Parallels, and give developers a VM with Windows 10 and Visual Studio to run on their Mac. Make some awesome plumbing so that VS can connect across the VM host to the iOS emulators and build tools in OS X. Bring the game to Xcode’s home turf and crush it.

- Build a full Visual Studio iOS development experience for OS X.

I really like the second option there, actually. It’s technically doable. Apple would be powerless to stop it. You could make developers love Windows without them having to give up their Mac. Then later you show them why they’d love it even more on a Surface with a touch screen (those phone emulators really light up when you can, you know, touch them).

Is this a plan for cracking the “mobile ecosystem”? No. It’s a plan for attracting developers into the Microsoft ecosystem. Once they’re there, Microsoft will have a way easier time directing their attention to mobile.

My to-do list for the Edge team

I’m very excited about Windows 10’s new default browser, Microsoft Edge. The team over there is killing it when it comes to performance, web standards, and interoperability. It also looks great in the new black theme (though I think the default white/gray one is super drab).

That said, in its current Preview form it is clearly not a finished product. With only a few weeks left until its first official release, I wanted to share my list of things that I feel are missing. So here’s my list of basic browser UI must-haves that are currently missing, as of 7/4/15 with build 10162:

- New Window from the task bar

- First, right-clicking the Edge icon and then selecting the “Microsoft Edge” entry should launch a new window, as it does with virtually every other multi-instance Windows app (including IE).

- It also wouldn’t hurt to have explicit “New window” and “InPrivate” buttons here (IE had the latter, and Chrome has both).

- Save As / Save Target As…

- Currently, file downloads always go to the Downloads folder and always with whatever name the server provided (or the browser calculated). This is unacceptable for oh-so-many situations.

- IE provides (as other browsers do) a context menu option on links to save the target with a different name and in a different location. IE also provides a “Save as” option in the info bar that asks whether you want to run or save a file.

- Jump list integration

- Edge currently has no Jump List on the task bar or in Start at all. This should have frequent sites listed for easy access and pinning.

- Back-button history menu

- There’s no drop-down or right-click menu on the Back button with the last N locations. I just hit a common problem where a redirect or JS bug on Audi’s site trapped me from going back. In IE I could right-click on Back and get the list of the last 5 or 10 pages, so I could skip past the trap or broken page to where I wanted to be.

- “Undo close”

- Or some way to get back to an accidentally closed tab. IE had this in the New Tab page. It could go there or in the menu. Right now the best I’ve found is to go to History, but it’s actually pretty annoying to do that because History isn’t sorted by when tabs were closed, and if I have a lot of tabs open it can take a few minutes to figure out which history item is the thing I accidentally closed.

- Deleting address bar history

- In IE, if you mistype a URL (say “techmem.com” instead of “Techmeme.com”), or otherwise have something in your address bar auto-complete that you want to clear, you can just arrow down to it and hit the DEL key and it’s gone.

- Maybe this wasn’t discoverable, and maybe a UI affordance for mouse, or a hint, or something should be added, but in the meantime, at least enable the DEL key to work like it did in IE.

- Drag-and-drop