Seattle Municipal Court is a joke

/Begin rant

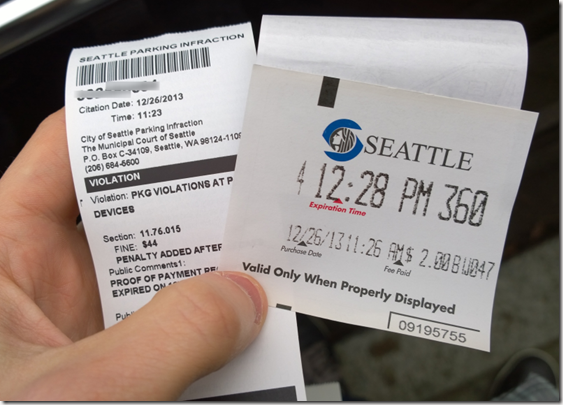

The day after Christmas, I tweeted a picture of a parking ticket I got, along with the parking receipt I was paying for at the same time. Obviously I found this kind of ridiculous and didn’t want to pay it. I tweeted the picture to @SeattlePD who responded and said I should contest it.

I knew that the time it would take would not be worth the $44 I’d save by avoiding the fine. But the principle of the thing drove me nuts. So what did I do? I responded "Not Guilty" and then when I received the hearing date, I mailed back the "contest by mail" form.

Sadly I didn’t keep a copy of the letter I wrote on the form. Here’s a recreation from memory:

On December 26th at around 10:15AM, I parked my vehicle on Wall St. between 5th and 4th avenues. I paid for a little over an hour of parking and placed the receipt sticker on the inside of the curbside window as prescribed. As the hour passed, my plans changed and I decided to stay in the neighborhood for lunch. I returned to my car as the parking receipt was expiring to purchase another, as the meter said "4 hours max" and I’d thus far only paid for an hour.

Unfortunately, the same parking meter I had used an hour earlier no longer seemed to be working. It would spend a couple of minutes connecting, and then display an error along the lines of "could not contact bank for approval." After a few tries, I decided to walk to another meter on the same block and try paying there. As I walked I used my phone to confirm on the SDOT website that this was the correct thing to do when a meter was not working properly. The second machine seemed to take longer than usual to authorize the charge, but it did work. New receipt in hand, I started walking back to my car.

As I was walking I noticed a parking officer was standing on a Segway next to my vehicle. After a second I realized there was already a piece of paper on my windshield. I shouted, "Excuse me!" and started jogging toward him, but he apparently didn’t hear me and started riding away toward 5th avenue and around the corner heading north. I ran up the block past my car to the corner, hoping he’d slow or stop to look at cars on that street and I could catch him. But by the time I got around the corner, he was gone.

I returned to my car and placed the new parking receipt next to the original one in the window. I went into the coffee shop I’d been in and wrote a note that said: "You gave me a ticket while I was paying!!!" and left it conspicuously placed on the same window. When I returned from lunch, the ticket and my note were still there.

As you can see from the enclosed parking receipts, I had paid for A) the hour just prior to the ticket, and B) an additional hour beginning at the time the ticket was issued. I believe the digits to the right of the amount paid indicate the meter from which the receipt was printed (W047 versus W049). If you look these up, you’ll find that they are indeed from different meters on this same block.

I do not believe I committed the alleged violation, as I was clearly making an earnest effort to pay for the parking spot (and did indeed pay for it!). Thank you for your time and consideration.

Pretty reasonable, right? I expected that my detailed description of what happened, along with the inclusion of both parking receipts, would make this a pretty simple case to dismiss. At worst, I feared that they would cite some technicality, or maybe challenge my account of events.

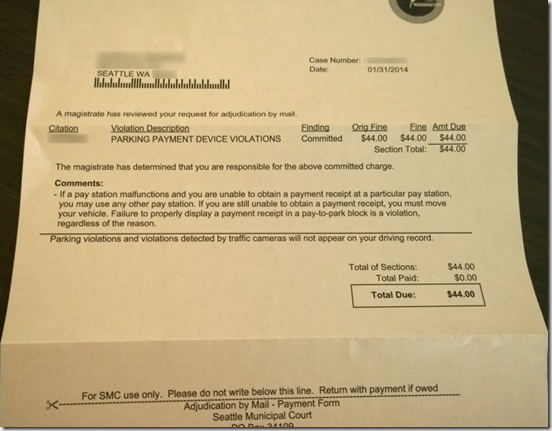

But no. Instead, after two weeks, I finally received the magistrate’s reply:

Here’s what the comment section says:

If a pay station malfunctions and you are unable to obtain a payment receipt at a particular pay station, you may use any other pay station. If you are still unable to obtain a payment receipt, you must move your vehicle. Failure to properly display a payment receipt in a pay-to-park block is a violation, regardless of the reason.

I was appalled. This "magistrate" obviously didn’t even read what I wrote. Worse, the other page of this letter says that I cannot appeal or in any other way ask for accountability regarding this decision. Remember, I included the parking receipts with my letter on their form. I did use a different machine, and I did display the parking receipt. This parking receipt:

(I thought I’d also taken a picture of the first receipt, but apparently did not)

Their response includes absolutely nothing to indicate who this "magistrate" was, or how to report their utter failure to do their job. Presumably, they don’t want such reports. They just want my money, whether the law actually says they should have it or not.

$44 is nothing. I’ve paid that for legitimate parking tickets in the past without complaint (other than grumbling to myself about how high the fines are these days). While I’d prefer the parking officer have given sufficient time after the initial receipt expired, or have looked at the other meters on the block to see if someone was paying at one, I can’t really fault him. He was doing his job, and I was the victim of unfortunate timing.

This "magistrate" however, should be fired. Or at least reprimanded. You can’t be in a position of judicial power and make a judgment based on skimming a defendant’s carefully written testimony. You can’t blatantly disregard material evidence. You can’t copy and paste a boilerplate response which is clearly not applicable to my situation. And then tell me I have no recourse.

Am I overreacting? Probably. But this is my blog so what good is it if I can’t vent when I’m annoyed? And what happens if and when this person is given judicial power over more important matters? Will they continue to be just as careless?

Anyway, that’s my rant. If you happen to know someone in the Seattle Municipal Court system, or on the parking enforcement side of things, who wants to try and make this right, send them my way. Otherwise, I guess I’ll be sending in my $44 tip on $4 of parking.

/End rant

Longtime readers may remember that ages ago I used to write occasional posts with the title “What I Want: <some topic area>.” It’s been a while, but I’m going to try bringing that back, starting today.

I’ve thought about and wanted this particular thing for a long time, but figured that now is as good a time as any to jot down my thinking for blending the Windows desktop and Metro/Modern/Immersive environments. I guess I’m partly inspired to blog about it because of Joseph Machalani’s post “Fixing Windows 8” he published back in December. I loved his approach of laying out concept and principles, and then showing mockups of how his proposal would work.

I’m not going to spend anywhere near as much time on this post as Joseph clearly did on his. I’m also not going to draw anything as pretty or make slick videos. Sorry. I have code and app building posts to write 🙂

What I will do is try to lay out my thinking for how I would tackle the same problems Joseph discussed. And I’ll share some quick and dirty mockups of roughly how it would work. Maybe one of my old pals at MSFT (or whoever is running design in Windows now) will like something from it and be inspired. Or maybe they’ll hate it. Or maybe they’re already thinking along the same lines. Whatever the case, I think it could spawn an interesting discussion.

Disclaimer: I have not shared or discussed any of this with anyone from Microsoft, and have absolutely no knowledge of their plans other than being aware of the rumors documented on The Verge and other places in recent days. This is nothing more than my musings as an enthusiastic user, developer, and wannabe designer.

So let’s begin with a little background from my perspective…

Simplicity versus capability

By and large, the world of computing UX is dominated by two approaches.

Apple uses both approaches. They ship the iPad with a simple UX, and the Mac with a powerful one. They’re largely unrelated experiences. One is not a scaled down or scaled up version of the other. They were designed independently, with vastly different goals.

Along the same lines, Windows Phone (7+) and Windows 7 easily fit into the same respective buckets.

Windows 8 — Mashing things up

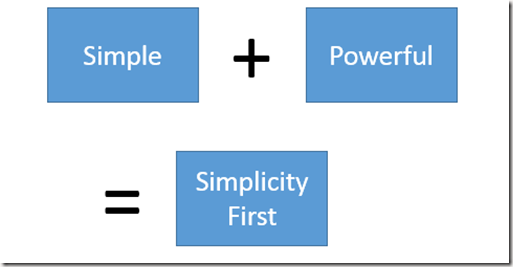

I believe the designers of Windows 8 saw value in both approaches and set out to build a UX with the best of both worlds. I think the formula they used was essentially this:

I don’t think this was a crazy idea. In fact, I think this exact concept is valuable in some situations. The problem, I think, is that it fails to address 100% of the existing “Simple” and “Powerful” buckets. And yet, Microsoft decided to pitch it (and deliver it) as the modern replacement for those concepts it decided were legacy. And frankly, I think there’s more market today outside of the segment “Simplicity First” can address, on either side.

My alternative — a scalable UX

Rather than billing a single Simplicity First UX as all you need, I think what we need is a scalable UX.

Some devices (and users!) lend themselves to simpler UX, and this should be embraced. The iPad is a great example of this. Many, many users (and pundits) find its limitations to be a feature. I think a truly unified UX needs to start here and be able to exist completely in this bucket.

At the other end of the spectrum, you have what I’ll call a “Capability First” UX. Apple has slowly (very slowly) started pushing Mac OS to fit here. Things like LaunchPad and full-screen mode give the user a way to toss off the complexity of their normal desktop environment when they’re in the mood. And the way they’re doing it involves bringing some elements of iOS into the Mac world.

Why no “Powerful only” segment at the top of the scale? I just don’t think it’s valuable. As is, each step in the scale is a superset of functionality, just prioritized differently.

Microsoft has already shown that the design language and UX model formerly known as “Metro” can scale from Simple to Simple First. Windows Phone is Metro in Simple mode. Windows 8 is Simple First.

Unfortunately, with Windows 8 and Surface, Microsoft missed two important things. First, they failed to address the market for a Simple UX on a device any larger than a phone. Second, they failed to deliver the Capability First UX that traditional PC users demand for their desktops and most laptops.

A note on Windows 8.1 and update rumors

Windows 8.1 takes some really tiny opt-in baby steps to addressing the Capability First crowd (mainly by allowing you to boot to the desktop). The benefit of that is something that, today, I find questionable. And it’s off by default, so hard to call it capability first.

The other change in 8.1 was the restoration of the Start button on the desktop. There are two small benefits to this. First, it partially solves the discoverability problem of the lower-left corner mouse trigger for getting to Start (I say partially because it doesn’t help when you’re not in the desktop!). But second, it lets users feel more rooted in the desktop, whereas 8.0 was all about Start being the root and desktop being essentially an app.

For an OS update that took a year, and has a lot of great new stuff (SkyDrive integration, vastly improved Metro window management, lots of platform improvements, etc), these changes felt more like reluctant low-hanging-fruit concessions than earnest investments.

However, rumor has it that the upcoming “Update 1” will push further in this direction. Given the timeframe, I expect there will still be a lot of seams as you move up or down the scale I proposed above. But for an update put together over a few months, the rumors seem compelling enough. More than their functionality alone (which I’m anxious to see firsthand), it’s promising to see the new Windows leadership taking real steps here, and doing it with such agility.

Of course, I still have reservations. In particular, about how they’ll pursue this apparent “back to the desktop” objective in future releases. Naturally, since this is my blog, I have some ideas to share about that…

Scaling Windows from Simple to Capability First

Here I’ll describe how I’d take Windows from Simple to Capability First. We’ll start at the top, which is where things change the most.

My Capability First model

Well let’s get the obvious out of the way. This is Windows, so our Capability First UI is going to start out like this:

I bet I know your first question. What happens when you click that Start button? Here’s where I get a little crazy. Instead of opening a Start “menu” or taking you to the Start screen, that button really becomes the “new window” button.

Quick reminder: These are quickly hacked together mock-ups to convey the idea. Don’t judge the details!

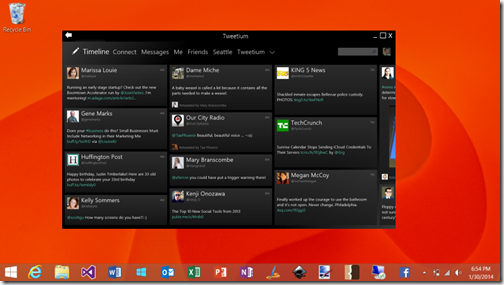

Clicking on that button gives you a new “blank” window. Well, not entirely blank. It shows you Start. In other words, all the things that window can become. It could show a revised version of Start with recently visited documents or links, but the core idea is that it’s an evolution of the Start we know from Windows 8.1 today.

Selecting an app, whether it’s a Store app or a desktop app, turns this window into that app’s window.

(Ignore the fact that it doesn’t have a taskbar entry, just another case of me being lazy with the mockups)

Reimagining windows, not Windows

Today, the user starts applications, and applications create windows. Sometimes they’re desktop windows, sometimes they’re “immersive” windows. They act differently, and the user really has no control over which sort of window they get.

What if we turned the paradigm on its head a bit and said that the user creates windows, and windows are an organizational construct for hosting apps (and documents, and web sites).

Instead of the desktop being an app inside of the immersive environment, the desktop would be a place where every window is its own immersive environment. Its own workspace. Indeed, maybe we can distinguish this concept a bit and instead of just calling it a window, call it a workspace, or a workspace window.

That means it has its own “back stack.” It could also have its own tabs. If something about this feels familiar to you, it should. The ultimate evolution of this idea would unify the browser’s tab and navigation stack concepts with the window manager completely. I don’t see why one tab can’t have an app like Tweetium and another tab have www.amazon.com in it. How the browser has remained its own window-manager-within-a-window-manager for so long is puzzling to me.

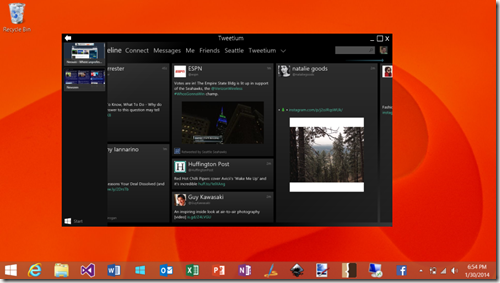

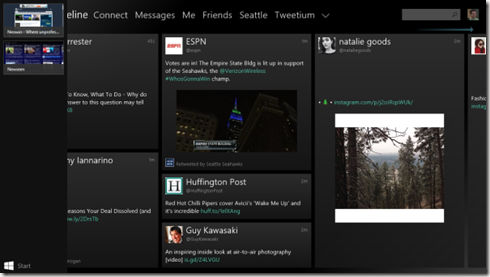

The idea then is that if you grab that window’s titlebar and drag it to the top of the screen, or you click the maximize button, you’re in today’s immersive mode.

Grab it from the top (as if you were going to close or rearrange it today) and you can restore it to some position on the desktop. Then you can grab another workspace window and maximize that, essentially taking you back to the “metro shell” but with just the windows in that workspace.

Since this is the same window manager we already know (and sometimes love) from the Windows 8 “Metro” environment, maybe we can do things like snap windows inside it.

Another cool feature of this would be the ability to pin a workspace arrangement to the taskbar. Then any time you clicked on that taskbar entry, you’d get that set of apps loaded into a workspace window. Maybe two are snapped side-by-side in it, or maybe you have Newseen and Tweetium in separate tabs of the same workspace window.

If we did have tab support in these workspace windows, then “New Tab” is basically Start.

(last reminder that this is about the UX concepts and interaction model, not the graphical design!)

Of course, just like the browser today, you’d be able to drag tabs between workspace windows, or out into their own new workspaces.

Could classic desktop windows participate in the tabbing and such? Maybe not. I can imagine several technical challenges there, though possibly solvable for at least some cases. But even if not, I don’t think that would be a problem.

Scaling it down

The model above shows how I’d love Windows to work on my desktop and laptop. On my tablet, though, I might want something different.

For a Simple First variation, you’d just boot to a maximized workspace showing Start. You can always take it into the desktop if you want, but that functionality is tucked away until you need it (which maybe is never).

On the simplest devices, like an 8 inch consumer-level tablet, you may want to lock the system to one workspace. Basically, today’s Windows 8.1 but without the desktop available at all.

What do you think?

I’d love to hear what others think of this UX model. Love it? Hate it? Have ideas about how to make it better? Or a better idea? Jump into the comment section and let me know 🙂

Before I dive into the overall architecture behind my existing apps, I thought I’d walk you through how I recommend building a brand new WinJS app.

First of all, I’m going to assume you have Visual Studio 2013. You can get the free Express version here. It includes a few templates for JavaScript Windows Store apps. I don’t find the UI templates useful, but I do make use of the navigator.js helper included in the Navigation App template, so I recommend starting with that.

Our first example app will be a simple UI over an existing REST API which returns JSON. In this case, primarily due to its public availability with no API key requirement, we’re going to use the Internet Chuck Norris Database’s joke API.

This post will go into verbose detail explaining the boilerplate code you get in the Navigation App template, and I’ll point out a few pieces I don’t like and think you should delete. If you’re already familiar with the basics of a WinJS app this may not be the most interesting thing you could be reading. On the other hand, any input on the format, or corrections/additions, are most welcome!

Creating our app project

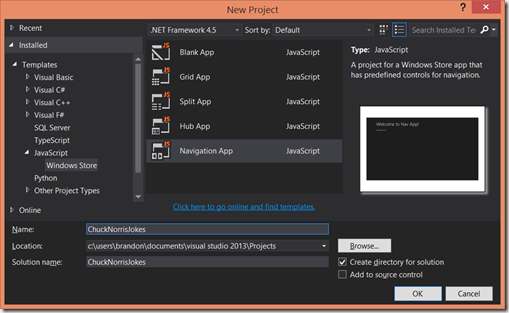

After starting Visual Studio, select the New Project option from the start page or file menu. You’ll get this dialog where you’ll choose the Navigation App template for a JavaScript / Windows Store app.

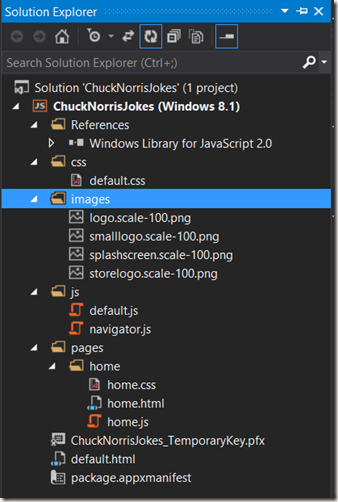

The result of using this template is that your app is filled out with a bit of boilerplate code and a basic file layout which looks like this:

What is all this stuff? Well let’s work top-down:

- A reference to the WinJS library. This means you can reference the WinJS JavaScript and CSS files from your project without actually including those files. The Windows Store will make sure the correct version of WinJS is downloaded and installed on the user’s machine when your app is downloaded (chances are it is already there, as most of the in-box apps use it).

- default.css, a file which contains some boilerplate CSS that is by default applied to every page in your app.

- A few placeholder images for your tile, splash screen, and the store.

- default.js which handles app startup and hosts the navigation infrastructure. I’ve always referred to this as the “MasterPage” in my code, though I may try to think of a better name in time for part 2.

- navigator.js which is kind of a funny thing. I don’t really know why it isn’t part of WinJS, especially in 2.0 (i.e. Windows 8.1). The actual navigation stack is part of WinJS, under the WinJS.Navigation namespace. What this file provides is a custom UI control (using the WinJS control infrastructure) that combines the WinJS.Navigation navigation stack with the WinJS PageControl infrastructure (which is essentially a mechanism for loading separate HTML files and injecting them into the DOM). I’ll explain this in more detail below.

- pages/home directory. This directory contains three files which constitute a “page control” in WinJS lingo. This is the only page your MasterPage will host if you were to run this app right now.

- default.html — This is paired with default.js and default.css to define the “MasterPage” I described earlier. It’s the main entry point for the app.

- package.appxmanifest — The package manifest is an XML file which defines everything about how Windows deploys your app package when the user chooses to install it. It specifies your app’s main entry points (generally an executable or an HTML file), and also defines which permissions (or “capabilities”) your app requires, which app contracts it implements (“declarations”), and how your app should be represented throughout system UI like the Start screen (i.e. its name, tile images, etc). I will of course dive into this in more detail later as well.

So what does all this code do?

If you run this app right now, a few things happen:

- Windows starts wwahost.exe, the host process for JavaScript apps.

  - You can think of this process as being a lot like iexplore.exe (the Internet Explorer process) but without any UI (“chrome”) elements, and designed to host true applications instead of for browsing web sites.

- wwahost.exe loads the StartPage specified in your manifest, in this case, default.html from your app package.

- default.html triggers the loading of core files like default.css and default.js.

- The default.js code begins executing as soon as the reference in default.html is hit.

Scoping your code to the current file

Now, most of your JavaScript files are going to follow a pattern you’ll see in default.js. That pattern is:

(function () {

"use strict";

// Bunch of code

})();

JavaScript developers are likely familiar with this pattern (sometimes called the “module pattern”). There are two things to note here: the “use strict” directive, and the outer function wrapping your code. “use strict” is an ECMAScript 5 feature which tells the JavaScript engine to enforce additional rules which will help you write better code. For example, you will be required to declare variable names before you can use them.

The other piece is that outer function. Without this outer function definition, anything defined in “bunch of code” would be added to the global namespace. This encapsulation technique is widely used by JavaScript developers to scope code to the current file. You can then use methods I’ll describe shortly to expose specific things to broader scopes as needed.
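To make that concrete, here is a minimal sketch of the pattern in plain JavaScript. The names MyApp, counter, and nextId are made up for illustration and are not part of the template. It returns an object from the wrapper function as one simple way of exposing things, which is not necessarily the mechanism a WinJS app would use.

```javascript
var MyApp = (function () {
    "use strict";

    // Private to this wrapper: nothing outside the function can see it.
    var counter = 0;

    function nextId() {
        counter += 1;
        return counter;
    }

    // Strict mode turns an accidental global into an error.
    var strictCaughtIt = false;
    try {
        undeclaredVariable = 42; // never declared with var
    } catch (e) {
        strictCaughtIt = e instanceof ReferenceError;
    }

    // Deliberately expose only what other code needs.
    return {
        nextId: nextId,
        strictCaughtIt: strictCaughtIt
    };
})();
```

Outside the wrapper, MyApp.nextId() works but counter does not exist, which is the whole point of the pattern.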

Digging into default.js

When we last left our executing app, it was executing the code from default.js. So then what? Let’s break down the default.js code the template provided.

First you’ll see 5 lines like this:

var activation = Windows.ApplicationModel.Activation;

var app = WinJS.Application;

JavaScript doesn’t have a “using namespace” directive. In fact, it doesn’t necessarily have a concept of namespaces at all out of the box. Instead you have a global namespace with objects hanging off it, but some of the objects serve no purpose other than to segment other objects/properties/methods. In other words, de facto namespaces.

All WinRT APIs are exposed as a set of classes hanging off of the Windows namespace (such as Windows.ApplicationModel.Activation in the above example). Furthermore, WinJS defines a helper for implementing the common JavaScript equivalent of namespaces, and uses that to expose all of its functionality. To avoid typing out the fully qualified namespaces all the time, commonly used ones can be referenced by defining variables as above.
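As a rough illustration of the idea in plain JavaScript (no WinRT or WinJS required), the sketch below builds a nested "namespace" out of ordinary objects and then aliases it with a variable. The defineNamespace helper, the fakeGlobal object, and the Contoso.Jokes names are all hypothetical. They only stand in for the kind of helper WinJS provides and are not its implementation.

```javascript
"use strict";

// Hypothetical helper: walks a dotted name, creating empty objects as
// needed, then copies the given members onto the final object.
function defineNamespace(root, dottedName, members) {
    var current = root;
    dottedName.split(".").forEach(function (part) {
        if (!current[part]) {
            current[part] = {};
        }
        current = current[part];
    });
    Object.keys(members).forEach(function (key) {
        current[key] = members[key];
    });
    return current;
}

var fakeGlobal = {}; // stands in for the real global object

defineNamespace(fakeGlobal, "Contoso.Jokes.Formatting", {
    shout: function (text) {
        return text.toUpperCase() + "!";
    }
});

// Alias the long name once, then use the short one everywhere.
var fmt = fakeGlobal.Contoso.Jokes.Formatting;
var shouted = fmt.shout("hello");
```

The alias is just a reference to the same object, so nothing is copied and nothing special happens at the language level.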

app.addEventListener("activated", function (args) {

if (args.detail.kind === activation.ActivationKind.launch) {

Here the app registers a handler for the “activated” event. Activation is the word Windows uses to describe entry points for when the user invokes your application from anywhere else in the system. There are many kinds of activation, but the most basic “kind” is called “launch.” This is what happens when your app is started from its tile in Start, or when you hit “Go” in Visual Studio.

if (args.detail.previousExecutionState !== activation.ApplicationExecutionState.terminated) {

// TODO: This application has been newly launched. Initialize

// your application here.

} else {

// TODO: This application has been reactivated from suspension.

// Restore application state here.

}

Now we find some code that theoretically deals with the application lifecycle. I don’t like this approach, and I suggest deleting all that.

nav.history = app.sessionState.history || {};

This attempts to restore the navigation stack from history data saved before your app was terminated. I don’t like this either, so we’ll delete that too.

nav.history.current.initialPlaceholder = true;

This is telling the navigation stack that the current page shouldn’t contribute to the back stack. I don’t like this here either, so be gone with it!

// Optimize the load of the application and while the splash screen is shown, execute high priority scheduled work.

ui.disableAnimations();

var p = ui.processAll().then(function () {

return nav.navigate(nav.location || Application.navigator.home, nav.state);

}).then(function () {

return sched.requestDrain(sched.Priority.aboveNormal + 1);

}).then(function () {

ui.enableAnimations();

});

args.setPromise(p);

Now things get interesting.

Okay, well, the disable/enable animations thing is an optimization to avoid unnecessary work while the system splash screen is still up. So maybe that’s not that interesting. I actually think it’s a little hokey, but we’ll leave it for now. However…

WinJS.UI.processAll is a very important WinJS function which tells it to process WinJS declarative control bindings in your HTML. In other cases you’d probably specify a root element, but in this case none is specified, so it starts at the root of the DOM, which means processing the markup from default.html.

Essentially what this does is walk the DOM looking for data-win-control attributes, which specify that an element should host a WinJS control. For each one found, it invokes the associated JavaScript code needed to create the control.

This time, the only control it will find is this one from default.html:

<div id="contenthost" data-win-control="Application.PageControlNavigator" data-win-options="{home: '/pages/home/home.html'}"></div>

This invokes code from navigator.js, where Application.PageControlNavigator is defined. It also parses the JSON-like string provided in data-win-options into an object and passes that as the “options” parameter to the Application.PageControlNavigator constructor, along with a reference to the DOM element itself.
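Conceptually, that processing step looks something like the sketch below. To be clear, this is not WinJS’s code. It uses plain objects instead of real DOM elements so it can run anywhere, and processTree, resolveName, FakeNavigator, and globals are names invented for the example. The options string here is strict JSON so that JSON.parse can handle it, whereas the real attribute accepts the looser syntax shown above.

```javascript
"use strict";

// A stand-in control. Its constructor receives the host element and an
// options object, mirroring the description above.
function FakeNavigator(element, options) {
    this.element = element;
    this.home = options.home;
}

// Stand-in for the global object that control names are resolved against.
var globals = { Application: { PageControlNavigator: FakeNavigator } };

// Resolve a dotted name such as "Application.PageControlNavigator".
function resolveName(root, dottedName) {
    return dottedName.split(".").reduce(function (obj, part) {
        return obj && obj[part];
    }, root);
}

// Walk the tree depth-first and create a control for every element that
// carries a data-win-control attribute.
function processTree(element, created) {
    var attrs = element.attributes || {};
    var controlName = attrs["data-win-control"];
    if (controlName) {
        var Ctor = resolveName(globals, controlName);
        var options = JSON.parse(attrs["data-win-options"] || "{}");
        element.winControl = new Ctor(element, options);
        created.push(element.winControl);
    }
    (element.children || []).forEach(function (child) {
        processTree(child, created);
    });
    return created;
}

var fakeDocument = {
    tag: "body",
    children: [{
        tag: "div",
        attributes: {
            id: "contenthost",
            "data-win-control": "Application.PageControlNavigator",
            "data-win-options": "{\"home\": \"/pages/home/home.html\"}"
        }
    }]
};

var controls = processTree(fakeDocument, []);
```

After this runs, controls holds a single FakeNavigator whose home property is the path from the options string.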

What’s all this .then() stuff?

WinJS.UI.processAll is an async method, and so its return value is a promise object. If you’re familiar with C# async, this is roughly equivalent to Task<T>. However, instead of representing the task itself, the idea is this object represents a promise to return the requested value or complete the requested operation.

The number one thing you can do with a promise object is to specify work to be done when the promise is fulfilled. To do this you use the promise’s .then method.

In this case, we tell processAll to do its thing, which results in instantiating the PageControlNavigator asynchronously. When it’s done, the code in the .then() block executes and we call nav.navigate to navigate to our app’s start page.

When that’s done, this boilerplate code requests that the WinJS scheduler (a helper for prioritizing async handlers and other work executed on the current thread) execute all tasks that we or WinJS have declared as being high priority. That’s another async task, and when it’s completed, we re-enable animations.

The args.setPromise line is really about the system splash screen. During app activation the system keeps the splash screen visible until after your “activated” handler has returned. However, we actually want to keep it up until we’re ready to show something, so the setPromise method lets you specify a promise which the splash screen will wait on. When it’s fulfilled, the splash screen will do its fade animation and your app UI will be shown.

The promise we pass is actually the return value of our last .then() statement after processAll. WinJS’s implementation of promises supports a very handy chaining feature which is relevant here. Every call to .then() returns another promise. If the function you provide as a completion handler to .then() returns a promise, then the promise returned by .then() is fulfilled when that promise is fulfilled.

Hopefully your head didn’t explode there. When first encountered, this can take a minute to wrap your head around, but I swear it makes sense =)
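If it helps, here is that chaining rule in isolation. This sketch uses the standard Promise built into modern JavaScript engines rather than WinJS.Promise, on the assumption that the two behave alike for this purpose. The delay helper is made up for the example.

```javascript
"use strict";

// Made-up helper: a promise fulfilled with `value` after `ms` milliseconds.
function delay(ms, value) {
    return new Promise(function (resolve) {
        setTimeout(function () { resolve(value); }, ms);
    });
}

var log = [];

var chained = delay(10, "first").then(function (value) {
    log.push(value);
    // Returning a promise here means the promise returned by this .then()
    // is not fulfilled until the inner promise is.
    return delay(10, "second");
}).then(function (value) {
    log.push(value);
    // Returning a plain value fulfills the next promise with that value.
    return log.join(", ");
});

// This line runs before either handler, because the handlers only run
// once their promises are fulfilled.
log.push("handlers registered");
```

The second handler receives "second" only after the inner delay finishes, and chained is the promise for the very last step in the chain.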

To try and help clarify that, let’s take another look at that part of the code with some of the parameters stripped out and a few numbers labeling the approximate sequence of events:

var p = ui.processAll().then(function () { // 1

return nav.navigate(); // 3

}).then(function () {

return sched.requestDrain(); // 4

}).then(function () {

ui.enableAnimations(); // 5

});

args.setPromise(p); // 2

- So our first step is that processAll kicks off an async task and returns a promise.

- The very next thing that happens is the args.setPromise call. Then our activated handler runs to completion.

- Later on, when the processAll task completes, its promise is fulfilled, and we run the handler that invokes nav.navigate. That call itself returns another promise, which bubbles out to the promise returned by the .then() it handled.

- Only after that task is complete, do we run the next handler, which calls requestDrain. Again, this returns its own promise.

- When that completes, we’ll run the code to re-enable animations.

- When that returns, the whole chain will finally be complete, and “p” will be fulfilled. Only then will the splash screen dismiss.

Re-read that if you need to. If you haven’t been exposed to promise-based async development before, I can imagine it taking a few minutes to process all that. Once you figure out the promise model, you’re pretty much good to go.
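For anyone who wants to watch that ordering happen, below is a rough simulation that runs outside of Windows. Everything in it is a stand-in: fakeAsync replaces the real processAll, navigate, and requestDrain calls, setPromise just records when the chain finishes, and standard promises replace WinJS.Promise. It only demonstrates the ordering, not what those APIs actually do.

```javascript
"use strict";

var events = [];

// Stand-in for an async call: records when it starts, then completes on a
// later turn of the event loop.
function fakeAsync(name) {
    events.push(name + " started");
    return new Promise(function (resolve) {
        setTimeout(resolve, 5);
    });
}

// Stand-in for args.setPromise: the "splash screen" waits on the promise.
var splashDismissed;
function setPromise(promise) {
    events.push("setPromise called");
    splashDismissed = promise.then(function () {
        events.push("splash screen dismissed");
        return events;
    });
}

function activatedHandler() {
    var p = fakeAsync("processAll").then(function () {   // 1
        return fakeAsync("navigate");                     // 3
    }).then(function () {
        return fakeAsync("requestDrain");                 // 4
    }).then(function () {
        events.push("animations re-enabled");             // 5
    });

    setPromise(p);                                        // 2
    events.push("activated handler returned");
}

activatedHandler();
```

When splashDismissed is fulfilled, the events array lists the steps in the order they actually ran, with the handler returning long before the navigation step begins.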

If you want to go read more about promises, some helpful links @grork suggested are:

MSDN / Win8 App Developer Blog: All about promises (for Windows Store apps written in JavaScript)

MSDN: Asynchronous programming in JavaScript (Windows Store apps)

MSDN Blogs / The Longest Path: WinJS Promise Pitfalls

Got it. What about the rest of default.js?

Our default.js file has just a little more code we should deal with:

app.oncheckpoint = function (args) {

// TODO: This application is about to be suspended. Save any state

// that needs to persist across suspensions here. If you need to

// complete an asynchronous operation before your application is

// suspended, call args.setPromise().

app.sessionState.history = nav.history;

};

This is using the “oneventname” shorthand for registering an event handler for the “checkpoint” event. As the template comment suggests, this event indicates the app is about to be suspended, and that it should immediately release any resources it can, and persist any information it may need the next time it’s started, if the system decides to terminate it while suspended.
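If the relationship between the shorthand property and addEventListener seems fuzzy, here is a toy model of one way such a shorthand can work. This is not WinJS code, and ToyApp and its dispatch method are invented for illustration. The assumption being modeled is that assigning to the property behaves like a single, replaceable listener.

```javascript
"use strict";

// Toy event source. It supports addEventListener plus an "oncheckpoint"
// property that behaves like one replaceable listener.
function ToyApp() {
    this._listeners = [];
    this._onHandler = null;
}

ToyApp.prototype.addEventListener = function (type, handler) {
    this._listeners.push({ type: type, handler: handler });
};

ToyApp.prototype.removeEventListener = function (type, handler) {
    this._listeners = this._listeners.filter(function (entry) {
        return !(entry.type === type && entry.handler === handler);
    });
};

ToyApp.prototype.dispatch = function (type, detail) {
    this._listeners.slice().forEach(function (entry) {
        if (entry.type === type) {
            entry.handler({ type: type, detail: detail });
        }
    });
};

Object.defineProperty(ToyApp.prototype, "oncheckpoint", {
    get: function () { return this._onHandler; },
    set: function (handler) {
        // Assigning replaces the previous shorthand handler, if any.
        if (this._onHandler) {
            this.removeEventListener("checkpoint", this._onHandler);
        }
        this._onHandler = handler;
        if (handler) {
            this.addEventListener("checkpoint", handler);
        }
    }
});

var toyApp = new ToyApp();
var calls = [];

toyApp.oncheckpoint = function () { calls.push("replaced handler"); };
toyApp.oncheckpoint = function () { calls.push("shorthand handler"); };
toyApp.addEventListener("checkpoint", function () {
    calls.push("explicit listener");
});

toyApp.dispatch("checkpoint");
```

The practical takeaway in this model is that a second assignment to the shorthand property replaces the first handler, while addEventListener calls accumulate.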

I’ll cover more on suspension and the app lifecycle later. For now, I suggest deleting the boilerplate code in this handler, but keep the handler itself around as it will be useful later.

Finally, we come to the last bit of default.js code:

app.start();

Up until now, the code in this file has been defining functions but not actually executing them. This line kicks off WinJS’s app start-up infrastructure, which ultimately results in the “activated” handler we described above being invoked, after the rest of the default.html page has been loaded.

Our trimmed down default.js

If you followed my suggested deletions, and also delete the comments inserted by the template, your default.js should now look like this:

(function () {

"use strict";

var activation = Windows.ApplicationModel.Activation;

var app = WinJS.Application;

var nav = WinJS.Navigation;

var sched = WinJS.Utilities.Scheduler;

var ui = WinJS.UI;

app.addEventListener("activated", function (args) {

if (args.detail.kind === activation.ActivationKind.launch) {

ui.disableAnimations();

var p = ui.processAll().then(function () {

return nav.navigate(Application.navigator.home);

}).then(function () {

return sched.requestDrain(sched.Priority.aboveNormal + 1);

}).then(function () {

ui.enableAnimations();

});

args.setPromise(p);

}

});

app.oncheckpoint = function (args) {

};

app.start();

})();

Okay, I cheated and also removed the “nav.location || “ and “, nav.state” parts of what was being passed to nav.navigate. Since we deleted the code that restored that from sessionState, we don’t need them.

On to home.js

Now that we’ve gone over the basic bootstrapping done in default.js, we can take a look at our home page. The only interesting part right now is actually home.html.

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" />

<title>homePage</title>

<!-- WinJS references -->

<link href="//Microsoft.WinJS.2.0/css/ui-dark.css" rel="stylesheet" />

<script src="//Microsoft.WinJS.2.0/js/base.js"></script>

<script src="//Microsoft.WinJS.2.0/js/ui.js"></script>

<link href="/css/default.css" rel="stylesheet" />

<link href="/pages/home/home.css" rel="stylesheet" />

<script src="/pages/home/home.js"></script>

</head>

<body>

<!-- The content that will be loaded and displayed. -->

<div class="fragment homepage">

<header aria-label="Header content" role="banner">

<button data-win-control="WinJS.UI.BackButton"></button>

<h1 class="titlearea win-type-ellipsis">

<span class="pagetitle">Welcome to ChuckNorrisJokes!</span>

</h1>

</header>

<section aria-label="Main content" role="main">

<p>Content goes here.</p>

</section>

</div>

</body>

</html>

Again there are some things I think you should delete. They are:

- The outer html, head, and body tags, along with the charset meta tag and the <title> tag, are utterly unused. The reasons will become clearer when I get to describing the fragment loader in a later post. In essence this code is dead on arrival and just shouldn’t be here.

- The WinJS references are redundant. They’re already loaded by default.html, and serve no purpose here. At best this will be optimized to a no-op; at worst it’s going to run redundant code.

- Default.css is the same story. No idea why the template puts this here; even MSDN advises you not to do this exact thing.

- The back button. This may seem odd, since I did tell you to choose a Navigation app. The reasons for this will become more clear as we evolve the app template. Suffice it to say that having the navigator capability can be useful regardless of whether you actually expose a back button. And it’s nice to have the option to expose one later if you want to, or on specific pages where it’s more useful.

When we boil it down to the functional parts, the entire file looks like this:

<link href="/pages/home/home.css" rel="stylesheet" />

<script src="/pages/home/home.js"></script>

<div class="fragment homepage">

<header aria-label="Header content" role="banner">

<h1 class="titlearea win-type-ellipsis">

<span class="pagetitle">Welcome to ChuckNorrisJokes!</span>

</h1>

</header>

<section aria-label="Main content" role="main">

<p>Content goes here.</p>

</section>

</div>

An important thing to remember is that this is not meant to be a complete HTML file. We never intend it to be. It’s a fragment of HTML which will get loaded into the document described in default.html. That’s why that outer code is superfluous. We already did that stuff in default.html.

What’s left should look fairly straightforward. We load the mostly empty JS and CSS associated with this page, and then define a header and body with some placeholder content.

Let’s make this an app!

We’re going to start very simple and build out from there. For this first part of the mini-series, our goal is simply to display a random joke from the Chuck Norris joke database.

To do that, we actually need very little code. Let’s start by changing home.html to provide us a place to render the joke text:

<link href="/pages/home/home.css" rel="stylesheet" />

<script src="/pages/home/home.js"></script>

<div class="fragment homepage">

<header aria-label="Header content" role="banner">

<h1 class="titlearea win-type-ellipsis">

<span class="pagetitle">Chuck Norris joke of the day!</span>

</h1>

</header>

<section aria-label="Main content" role="main">

<p id="jokeText"></p>

</section>

</div>

I changed only two spots: the page title text and the paragraph in the main section. The first is just a cosmetic change to our header. The second removes the placeholder text and assigns an id to the paragraph element so we can reference it later.

Now if we jump over to home.js, you’ll find this:

(function () {

"use strict";

WinJS.UI.Pages.define("/pages/home/home.html", {

// This function is called whenever a user navigates to this page. It

// populates the page elements with the app’s data.

ready: function (element, options) {

// TODO: Initialize the page here.

}

});

})();

Again, you can delete the template comments after you’ve read them once. To display our joke we’re going to do three things:

- Make a request to the API endpoint at http://api.icndb.com/jokes/random/

- Parse the response to find the joke text.

- Assign that to the innerText property of our jokeText element.

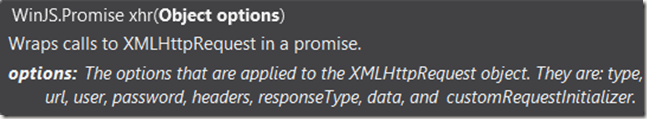

To make our request we’re going to use the standard JavaScript XmlHttpRequest mechanism, but we’ll call it via the handy WinJS.xhr wrapper. WinJS.xhr is great because it takes a simple property bag of options and gives you back a promise for the result of the request.

When you type WinJS.xhr( into Visual Studio you’ll get a handy IntelliSense description:

As the default request type is GET, we only really care about the url field, so that’s the only option we’ll populate.

(function () {

"use strict";

WinJS.UI.Pages.define("/pages/home/home.html", {

ready: function (element, options) {

var jokeTextElement = element.querySelector("#jokeText");

return WinJS.xhr({

url: "http://api.icndb.com/jokes/random/",

}).then(function (response) {

// response is the XMLHttpRequest object,

// ostensibly in the success state.

try {

var result = JSON.parse(response.responseText);

}

catch (e) {

debugger;

}

if (result && result.value && result.value.joke) {

jokeTextElement.innerText = result.value.joke;

}

else {

jokeTextElement.innerText = "The server returned invalid data =/";

}

}, function (error) {

// error is the XMLHttpRequest object in the error state.

jokeTextElement.innerText = "Unable to connect to the server =/";

});

}

});

})();

As you can see, making an HTTP request is super simple. Once we have the response text, a simple call to JSON.parse will turn it into a JS object we can inspect and make use of. If the response text is missing or not in valid JSON format, the parse call will throw an exception, and we’ll print a message that the server has returned invalid data. We also inspect the returned object for the fields we’re looking for. If we didn’t do this, and just used result.value.joke where “value” was missing, that line would throw an exception (essentially the JS equivalent of an Access Violation). If “value” were there but its “joke” property were missing, that would result in printing the text “undefined” to the screen. Since that’s not pretty, we check for the presence of those fields and print a slightly nicer error, if that case ever were to occur.
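If you’d rather keep the parsing and validation out of the page code, here’s a minimal sketch of the same checks pulled into a standalone function. The name extractJoke is my own invention for illustration (it isn’t part of WinJS or the template), and it assumes the response has the same value.joke shape used above.

```javascript
// Hypothetical helper, not part of WinJS or the app template.
// Returns the joke text, or null when the payload is missing or malformed.
function extractJoke(responseText) {
    var result;
    try {
        result = JSON.parse(responseText);
    } catch (e) {
        // Empty or malformed JSON: treat it like any other invalid response.
        return null;
    }
    // Check each level before reading the next, so a missing "value"
    // never throws and a missing "joke" never prints "undefined".
    if (result && result.value && typeof result.value.joke === "string") {
        return result.value.joke;
    }
    return null;
}
```

In the ready handler you could then assign extractJoke(response.responseText) to innerText, falling back to the “invalid data” message whenever it returns null.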

The second function we pass to .then() is an error handler for the promise. If the request fails, or anything in WinJS.xhr throws an exception, it will get captured by the promise and handed to us here. To keep this example simple we don’t bother inspecting the error. But in the real world you’ll often want to have more elaborate error handling than that.
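As a rough idea of what slightly more elaborate handling might look like, here’s a sketch that picks a message based on the failed request’s status code. describeXhrError is a made-up name, the messages are placeholders, and it assumes the error object exposes the standard XMLHttpRequest status property.

```javascript
// Hypothetical helper: turns a failed request object into a user-facing message.
// Assumes `error` exposes the standard XMLHttpRequest `status` property.
function describeXhrError(error) {
    var status = error && error.status;
    if (!status) {
        // A status of 0 (or no status at all) usually means the request never completed.
        return "Unable to connect to the server =/";
    }
    if (status === 404) {
        return "The joke service could not be found =/";
    }
    if (status >= 500) {
        return "The server had a problem (" + status + ") =/";
    }
    return "The request failed (" + status + ") =/";
}
```

The error handler would then set jokeTextElement.innerText to describeXhrError(error) instead of a fixed string.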

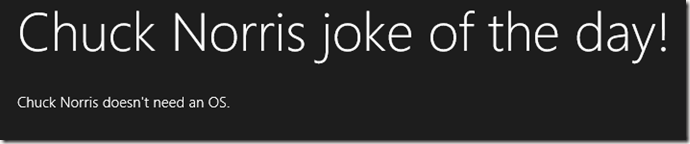

Now when we run the app, we get this!

That’s the first one I got. Seemed appropriate enough 🙂

What’s next?

In part 2 we’ll look at using WinJS templating and databinding to display more than one joke at a time. So check back soon! Oh, and please use the comment section to let me know if this is helpful, if the format works for you, and if you have any questions about the content of the post. I fully understand that this first post spent a lot of time on some rather basic concepts for anyone already working with WinJS (and likely for many using similar JS libraries). I promise the content will get more interesting for you folks soon, after I’ve hopefully helped get the less experienced folks up to speed.

This post isn’t strictly part of the “Building Great Apps” series, but it will be referenced there, and since someone was asking about this helper on Twitter I decided to go ahead and post it here.

In Windows 8.1, a new WinRT API was introduced for making HTTP requests. The main runtime class used is Windows.Web.Http.HttpClient.

There are several ways in which this is superior to the standard XmlHttpRequest interface. Unfortunately, for those using WinJS.xhr() today, converting your code to use HttpClient is non-trivial.

In my case, I had to convert all of my XHR calls to use HttpClient to work around a bug in XHR… A deadlock which occurs in IE/Windows’ XHR implementation when used simultaneously from multiple threads (i.e. the UI thread and one or more WebWorkers). I reported the issue to Microsoft and they identified the source of the bug, but rather than wait for them to issue a fix for it, I decided to migrate all my code to HttpClient which doesn’t have this problem.

In some cases, like my Twitter Stream API consumer, I rewrote the whole thing using HttpClient, because it made more sense, and the new implementation is superior in several ways. The original implementation did use WinJS.xhr, but it was a heavily… customized usage.

However, the rest of my code used WinJS.xhr in pretty standard ways. Rather than rewrite any of it, I decided to just create a drop-in replacement for WinJS.xhr which wraps HttpClient. Better yet, it should detect when WinRT is not available (e.g. if running on Windows Phone 8, iOS, etc.), and fall back to the regular WinJS.xhr implementation.

I posted the helper up here as a GitHub Gist:

https://gist.github.com/BrandonLive/8641828

To use it, include that file in your project, and then replace calls to WinJS.xhr with BrandonJS.xhr.
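The detect-and-fall-back idea can be sketched roughly like this. To be clear, this is not the code from the Gist; hasWinRTHttpClient and chooseXhr are names I made up for illustration, and the two request functions are placeholders for anything that follows the WinJS.xhr calling convention (options in, promise out).

```javascript
// Hypothetical sketch, not the Gist's implementation.
// `root` is the global object (window in an app); passing it in keeps this testable.
function hasWinRTHttpClient(root) {
    return !!(root && root.Windows && root.Windows.Web &&
              root.Windows.Web.Http && root.Windows.Web.Http.HttpClient);
}

// Picks the request function once, up front.
// `httpClientXhr` and `fallbackXhr` are placeholders for two functions that
// share the WinJS.xhr calling convention.
function chooseXhr(root, httpClientXhr, fallbackXhr) {
    return hasWinRTHttpClient(root) ? httpClientXhr : fallbackXhr;
}
```

Checking each level of the namespace before touching the next means the probe never throws on platforms where Windows isn’t defined at all.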

One caveat is that I couldn’t find a way to inspect a JavaScript multipart FormData object in order to build the equivalent HttpMultipartFormDataContent representation. You should still be able to use the helper; you’ll just need to create one of those instead of a FormData object and pass that as the request data in its place.

If you find any problems or want to submit any improvements, please do so on the Gist page!

My MVVM rant

As I said in the last post, XAML fans tend to favor the MVVM pattern. I’ve had only limited experience with this, but so far I’m personally not a fan. Let me be blunt. The MVVM projects I’ve seen tend to be over-engineered feats of data-binding hell. If you’ve ever thought, “those bits of ‘glue’ code I have to write are kind of annoying, so why don’t I spend a bunch more time making even more of it,” then this may be the option for you.

Okay, maybe that’s not fair. I’m sure some people make great use of MVVM. Others use the MVC pattern with XAML just fine. Both patterns have the same basic goals of separating your UI stuff from your other stuff, and theoretically making it easier for non-developers to do UI work, or to use fancy tools like Blend to do their UI work, or to build different UIs for different target environments (e.g. adapting to different screen sizes, or making your app feel “at home” on systems with different design conventions).

Personally, I don’t buy it. I get the vision, but I’ve never seen it work. I’ve seen projects crumble under the weight of unnecessary code supporting these patterns, and I’ve seen a lot of cases where development teams start out with a grand MVC vision and end up with a puzzling mash-up of excess components, plumbing gymnastics, and endless cases where someone was in a hurry and just bypassed the whole damn abstraction.

And best of all, I’ve never seen the proposed benefits actually bear fruit. Designers don’t write XAML, and most of these projects end up with exactly one view implementation. Am I wrong? Tell me in the comment section 🙂

Maybe the problem isn’t so much the pattern itself, but the scope at which it’s applied. The projects I’ve seen struggle tend to try and carve these horizontal delineations across an entire codebase. As you’ll see in later Building Great #WinApps posts, I don’t take this approach. While I do separate the UI from the data, I tend to think of the result more as a set of vertically integrated controls, with the necessary interfaces to be composed with each other as the project requires. Maybe it’s just a pedantic philosophical difference. Or maybe you MVVM fans just won’t like the way I build things. Keep following the series if you want to find out!

/rant

One of the greatest things about developing for Windows can also be a challenge. On competitive platforms you can find options. On Windows, you’re given options. And, well, many of them. I’m going to start with a quick rundown of how I see the competitive environments, then dive into your options on Windows 8/RT. Feel free to skip ahead if that’s all you’re interested in finding.

iOS

On iOS the only first-class platform you have available is Objective-C with Cocoa Touch. To a C++ (+JS / C# / etc) developer like me, examples of this code might as well be written in Cyrillic. That’s just at first glance though; I expect it’s not that hard to pick up. Weird as it may look.

Of course, you do have other options. They’re just not ones Apple cares about. Or in some cases, options they actively try to punish you for choosing. The two that come to mind for me are HTML/JS apps where you package up your code and mark-up into a native package and host it in a WebView (maybe using something like PhoneGap), or Xamarin. Okay, maybe Adobe has something too. If you care about that, you know about it.

HTML apps can, of course, call out to their Objective-C hosts, which lets you write some platform-specific, optimized native code when it is called for. It’s just clumsy.

Android

Android developers are roughly in the same boat, in that you get one real first-party option: Java, with a proprietary UI framework. For someone like me, this gives the advantage of having a familiar looking and working language (I tend to think of it as “lesser C#”). They do offer the NDK which I understand lets you write native C/C++ code if you want to avoid GC overhead and other Java pitfalls, though I think you’re still required to use Java to interact with the UI framework. Also, its use seems to be discouraged for most situations.

Otherwise, the same alternatives as iOS apply (HTML/JS, Xamarin, maybe some Adobe thing if you’re that sort of person). I actually don’t know if they hinder JS execution speed in the way that iOS does, but they certainly don’t do anything to make HTML/JS apps easy or efficient. In fact, my understanding is that it’s actually substantially harder to do this on Android than on iOS, primarily because of their fragmentation problem. CSS capabilities vary hugely from one version of Android to the next. And if you want to target the majority of Android users, you need to be able to run on a pretty sad and ancient set of web standards and capabilities. Ironic from the company that makes Chrome OS, but that’s reality.

Windows Phone 8

Windows Phone 8 is actually quite a lot like Android in this regard. By default you get first-party support for a managed language (C#) with a proprietary UI framework and some ability to write native C++ code if you wish.

Once again, you can write HTML/JS apps (and of course Xamarin could be described as either “at home” or maybe just “not applicable” here). HTML/JS apps here are a lot like those on Android, with a few advantages:

1) Every user has the same modern IE10 rendering engine with great support for CSS3 and some things that even desktop Chrome still doesn’t have, like CSS Grid (which is a truly wonderful thing).

2) JS runs efficiently and I believe animations can run independent of the UI thread.

3) Since the engine is the same as desktop IE10, you can build, test, and debug against that, which means you don’t have to deal with the weight of the phone emulator for much of your development process.

In other ways it’s more rough. There’s no real way to debug or DOM inspect the phone or even the emulator. I think iOS and Android may have some advantages in that particular regard.

Mobile web app blues

On each of the above platforms, web apps face various problems. On at least iOS, your JavaScript runs at about 1/10th speed, mostly because Apple hates you. Err, wants you to use Objective-C and Cocoa Touch.

Some common problems are:

A) You need to write a host process. On Android and WinPhone this is in Java or C#, which means you do have two garbage collectors and two UI frameworks to load up. Though fortunately the CLR/Java garbage collector shouldn’t have much of anything to actually do.

B) The WebView controls on these platforms (including WP8) tend to suck at certain things like handling subscrollers. They’re less common on the web, but immensely useful for app development.

C) None of them give you direct access to the native platform, outside of what’s in their implementation of HTML5 / CSS3 standards.

Windows 8

Windows 8 (/8.1/RT) is different. Here you actually get first-party options. In fact, not only are there options for programming language and UI framework, but you can mix and match many of them.

UI Frameworks

| | Direct3D | XAML | HTML |
| --- | --- | --- | --- |
| UI languages | C++ | C++, C# | JavaScript |
| Backend languages | C++, C# | C++, C# (JS, theoretically) | JavaScript, C#, C++ |

That’s a lot of possible combinations, and the paradox of choice very much comes into play. What’s great is that a lot of developers can use tools, languages, and UI frameworks they’re familiar with. What’s not so great is that the number of choices, and understanding how each of them works, can be overwhelming.

What is WinRT?

WinRT, or the Windows Runtime, describes a set of APIs exposed by Windows to any of the languages described above. The beauty of this is that when a team at Microsoft decides to offer functionality to Windows developers, they define and implement it once, and the WinRT infrastructure “magically” makes it available in a natural form to C++, C#/.NET, and JavaScript code running in a Windows 8 app.

I’ll dive more into what the Windows Runtime is and how it works in a later post, if there’s interest. Though I believe there are already articles around the web which describe it well enough.

Suffice to say that WinRT APIs are not designed to be portable and are not part of any kind of standard. These are equivalent to the native iOS and Android APIs, which let you do things like access file storage, custom hardware devices, or interact with platform-specific features like the Share charm, live tiles, and so on.

So then what is WinJS?

Despite some misconceptions I’ve heard, WinJS is not a proprietary version of JavaScript, or anything at all like that. Its full name is the Windows Library for JavaScript, and it’s just a JS and CSS library. Literally, it’s made up of base.js, ui.js, ui-light.css, and ui-dark.css. Those files contain bog standard JavaScript and CSS, and you can peruse them at your choosing (and debug into them, or override them via duck punching, when needed). It’s a lot like jQuery, and offers some of the same functionality, as well as several UI controls (again, written entirely in JS) which fit the platform’s look and feel.

Technically you don’t need to use any of it in your JS app, but there are a few bits you’ll almost certainly want to use for app start-up and dealing with WinRT APIs (e.g. WinJS’s Promise implementation, which works seamlessly with async WinRT calls). The rest is up to you, though I find several pieces to be quite useful.

I’ll dig into WinJS and the parts I use (and why) in a later post. But hopefully that summary is helpful here.

Got it, so which option should I choose?

That table above suggests a sizable matrix to evaluate. I wrestled with how to best present my own boiled down version of this (I’ve in fact just rewritten this whole section three times). I looked at presenting this in a “If you’re an X, consider Y and maybe Z” format. But that’s challenging, particularly as someone who came into mobile app development having had differing degrees of familiarity with a variety of platforms.

Instead I’m actually going to focus on the merits of each option I present, and call out how your background might factor in as appropriate. First up is…

C++ and Direct3D

This is the “closer to the metal” option and most appropriate for high-performance 3D games. It’s also a perfectly valid option for 2D games, especially if you’re already familiar with C++ and/or D3D, or even OpenGL. This is one of the easier options to rule in or out. It gives you the best performance potential, but is likely the most work, the most specialized, and least portable to non-MS platforms (relative to the other options here).

C++ and XAML

First off, if you’re a C/C++ developer and you’ve hated that for ages now Microsoft has kept its best UI frameworks to itself (or reserved them for those crazy .NET folks), rejoice! Beginning with Windows 8, Microsoft has realized that some people like C++ but also enjoy living in this century with things like an object-oriented control and layout model and markup-based UI.

If you choose this option, your code will run faster than if you wrote it in C# or JavaScript. It just will. You get a modern UI framework with a solid set of controls and good performance. Itself fully native C++ code, the XAML stack renders using Direct3D, supports independent (off-thread) animations, and integrates with Windows 8’s truly great DirectManipulation independent input system. In Win8.1 I think it may even use some DirectComposition and independent hit testing magic that JS apps have had since 8.0. Maybe.

XAML fans tend to favor the MVVM pattern. Others use MVC with it, with varying degrees of success. Honestly, I’m not a huge fan of either. I know that’s blasphemy to a lot of people. I wrote a little rant here on the subject, but I’ll spare you and publish it as a separate post later. Maybe someone will read it and explain the error of my ways 😉

C# and XAML

Here you find basically the same thing as above, with these differences:

- It’s going to be slower. The largest impacts are:

  - Start-up. Loading the CLR and having it JIT compile your code adds start-up time you don’t have as a C++ app.

  - Garbage collection. There’s just no getting around the fact that GC overall adds overhead and limits your ability to optimize.

  Depending on what you’re doing, the performance difference from C++ may or may not be noticeable.

- It’s going to be easier. Particularly if you don’t already know C++, and very much so if you already know C# or Java.

- It is for now the easiest way to share code with Windows Phone.

- It’s got killer tools, both in VS and extensions.

If you’re already a C# + XAML developer, this should be compelling. You get to use the language you (probably) love, the markup language you know, and a good chunk of the .NET API set you’re used to.

Yeah, there are some differences if you’re coming from WPF or Silverlight (or the phone version of Silverlight). It should still be an easy enough transition, and it’s cool that it’s a native code implementation and available to C++ developers (and thus now starting to be adopted inside Windows itself). Complain all you want about the N different versions they’ve confused you with over the years, but know that this is absolutely the version of XAML that Microsoft is taking forward.

JavaScript and HTML (and maybe some C++!)

If you know JavaScript, HTML, and CSS, this gets familiarity points. Contrary to some misconceptions, JavaScript apps on Windows are, in fact, real JavaScript. The major differences from writing a web app hosted in a WebView are:

- Windows provides the host executable, wwahost.exe. You don’t need to provide one. Your app is literally a zip file of JS, HTML, and CSS files, along with a manifest and any resources you choose to include (including, optionally, any DLLs of C# or C++ code you want to invoke).

- They’re faster, because the OS does smart things like caching your JS as bytecode at install time, and letting it participate in optimization services like prefetch and superfetch.

- You get direct access to the full set of Windows Runtime APIs.

- You can use the WinJS library of JS and CSS without having to directly include the files in your app (all apps share a common installation of it on the end user’s machine).

What if you’ve never used JS before? First off, forget anything you’ve heard about JavaScript. Since we’re talking Windows apps, you don’t have to worry about different web browsers, or ancient JavaScript limitations, or the perils of IE6’s CSS implementation. Yes, you’ll run into bugs your C++ or C# compiler would have caught, and refactoring can sometimes be a challenge. But you’ll also gain several benefits:

- A really productive, dynamic language which works really well with the web.

- A fast, lightweight, flexible UI framework which makes so many things fast and easy, as I’ll describe later.

- More portability. I’ll cover this more in a later post, but if you forgo a few niceties not available on other platforms (e.g. my beloved CSS Grid), much of your app will be really easy to port to other platforms or the web. Yes, much more portable than Xamarin.

- Tons of documentation (hell, I reference MDN daily) and example code out on the web.

- Great tools. Both in VS (and Blend I hear, if you’re into that sort of thing), and from third parties. Seriously, you’ve got to love web-based tools like this awesome one for analyzing performance characteristics of different implementations.

- Lots of portable libraries like jQuery, KnockoutJS, AngularJS, and so on. Drop them into your app and go.

- You can use PhoneGap to make your app even more portable, as it wraps various WinRT calls in a common wrapper that also works on WP8, iOS, and Android.

- If you don’t know it already, you’ll learn a valuable new skill for your repertoire, and one that’s not tied to any particular vendor’s whims.

You also get a few advantages from going with HTML over XAML:

- Start-up performance. Surprised? HTML/JS apps can actually start faster than equivalent C# + XAML apps. Well, on Windows anyway. Weird, right?

- UI performance. XAML got better in 8.1, but there are still several cases where the HTML engine is just better. Better at animations (within the realm of accelerated CSS3 animations and transitions), better at scrolling/panning, and better at other touch manipulations like swipe and drag+drop. In 8.0 the ListView control was faster (though maybe XAML caught up in 8.1).

- It’s super easy to consume HTML from web services, including your own. And because there are so many great tools for building and manipulating HTML outside of a browser, you can really easily write a server that passes down markup your app will render natively, without having to load a separate WebView.

There are other things, too. I like the WinJS async model better. You may not. I like the templating model. I like that everything including my UI is generally portable. I prefer CSS to the way XAML handles styling, and find it easier to write responsive UI (in the modern web sense of “responsive”, i.e. adapting to variable screen sizes). I like that it’s being pushed forward by titans other than just Microsoft. Oh, and it has some built-in controls that XAML lacks.

Regardless of your background, if you’ve got a few days to experiment, I highly recommend taking up a project like writing a simple app in JavaScript and HTML. In fact, in a later post I’ll walk through one from the perspective of someone with little or no JS/HTML/CSS knowledge. You might just find it fun, and be surprised by the result.

Plus, if you’re writing anything performance intensive (for example, some custom image processing routines), or some IP you want to keep obscured from prying eyes, you can do that part in C++. Windows makes it incredibly easy to write a bunch of custom C++ code that crunches data and then returns the result to your JavaScript frontend. It’s kind of brilliant, and I highly recommend checking it out. I’m sure I’ll give an example of that at some point too.

There are downsides. Unfortunately, even in 8.1, WebWorkers don’t support transferable objects, so anything you pass between threads gets copied. You also can’t share a WebWorker among multiple windows (without routing through one of said windows). With C++ or C# you get more control over threading and memory. The advantage in JavaScript is you don’t have to worry about locking, as the platform enforces serialized message passing as the only cross-thread communication mechanism.

The real answer

Most apps (which aren’t 3D games) are going to use either C#+XAML or HTML+JS. Which one you choose will probably depend a lot on your background and personal preferences. Both platforms can be used to create great, fast, beautiful, reliable, maintainable apps. Both can let you be extremely productive as a developer. Both look to have bright futures.

Microsoft used JS apps for most of the in-box Windows apps. The Mail client in Windows 8.1, the Xbox apps, and the Bing apps (except Maps). The Xbox One uses it for, as far as I can tell, everything. In other words, it’s not going anywhere, even if some folks suggest otherwise.

My answer

In my case, I was primarily a C++ developer who was an expert in Microsoft’s internal UI framework, which I’d used for years. I had built WinForms C# apps many years ago, and dabbled in WPF and Silverlight here and there (even making a rough Twitter app in the latter at one point!). The most extensive experience I had with WPF was when I was at Microsoft and was loaned out to help a struggling team with their project. I found that WPF/XAML had a few neat tricks, but I didn’t exactly fall in love with it.

I’d also had a bit of experience with web development, but it was pretty limited. I’d customized some WordPress themes and written some little JS slideshow / flipper sort of things, but nothing sophisticated, and I really had only known just enough about the language to get the job done.

When I decided to build my first Windows 8 app, 4th at Square, I chose JS because I wanted my app to be fast, because I wanted the project to be a fun learning experience, and because I wanted to see what all the fuss was about. As my first app (which I haven’t touched in a while now) I won’t point to it as a pinnacle of design. Or, well, anything. I do use it nearly every day though. And it met all of my goals. It was fast, even on a first-gen Surface RT. It was fun and educational. And I found myself liking a lot about that way of building an app.

Building Great #WinApps: Introduction

Recently I’ve had a few requests to share my experiences building Tweetium, Newseen, Cattergories, and 4th at Square. I’ve decided to jump back into the world of blogging with a series I’m going to not-so-humbly title “Building great #WinApps.” This post serves as an introduction to the series.

What will I cover?

A bit of everything. Here’s a sampling of topics I have planned:

- Overview of the programming language and UI platform options

- What factors should you consider?

- Why did I choose WinJS?

- The Win8 JS app model (or, “What the heck *is* WinJS anyway?”)

- Building a multi-threaded JS app

- Nailing start-up performance

- Architecture overview for Newseen and/or Tweetium

- Designing the UX for Newseen and Tweetium

- Building Tweetium’s flexbox-based grid view

- Running a WinJS app on the web or other platforms

- Workarounds for platform bugs and idiosyncrasies I’ve encountered

- Debugging tricks and tips

Those are just some of the topics I’ve been thinking of writing about. There will certainly be others, and some of those may get merged or reframed a bit along the way.

Will there be code?

You betcha! I plan to post examples as well as some utilities I’ve created along the way. I expect this to be a beneficial exercise for me as I absolutely have things I want to refactor, and nothing motivates that like having other eyes on your code 🙂

Is this only applicable to Windows apps?

Nope! Or at least, some of the content will apply anywhere. Some posts will apply to any JavaScript app, others I expect will apply to any app at all. But for now the focus will be on the apps I’ve developed for Windows, and that’s the perspective from which I’ll be writing for the time being. Hence the #WinApps tag.

What about other aspects like design?

I’ve been doing the end-to-end design, development, and marketing of these apps, and I plan to share some of my experiences in each of these areas. That said, I’m not trained as a designer (or marketer, PR person, etc) and mostly picked up bits and pieces from working alongside some greats during my time at Microsoft. And of course learning by doing and iterating over the last several months.

I am proud to say my recent app designs have received a lot of praise, so I have some hope that my insights here will be helpful to some. At the very least I can hope to spark conversations with some actual designers!

How often will you post?

I’m going to try and share something every few days over the next several weeks. After I hit publish on this introduction I’m going to jump right into my first topic, which seems to be a popular one to discuss this afternoon 🙂

How do I follow along?

If you subscribe to this blog, you’re set! I’ll also tweet links to my posts so you can follow @BrandonLive. I’ll use the hashtag #WinApps which we used for a tweet-up a couple months ago (and which I hope we’ll be using for future events soon), so you can also watch for that.

Please be sure to comment! Questions, feedback, corrections, other perspectives… all are welcome and encouraged!

Now I’m off to write the first real post…

On Friday the latest B-side Software creation hit the Windows Store. It’s a new Twitter app which I initially started to build because I was frustrated with the official client and found the third-party alternatives severely lacking for my needs.

To learn more about it, visit the Tweetium website, or go straight to Tweetium in the Windows Store.

Richard Hay of Windows Observer did a little write-up about the release and posted this handy video walkthrough of v1.0:

The first app update is already awaiting store approval (list of fixes and new features here).

If you’re a Twitter user on Windows 8.1 (or Windows RT), check it out and let me know what you think!

Why I’m voting against I-522

If you don’t live in Washington state, you might be unaware of this year’s controversial ballot initiative, I-522. In short, it proposes a law requiring that some foods deemed “genetically engineered” or containing “genetically modified organisms” be labeled as such in grocery stores.

I first became aware of this initiative two or three months ago via a sign posted at my local PCC where I buy most of my groceries. It was endorsing the initiative. My first thought was, “Oh, I haven’t heard about a battle over genetically engineered/modified foods, but if there is one, labeling seems like it could be a reasonable compromise.” A moment later though, I thought, “What do they mean by genetically engineered? I hope the actual initiative is very specific about what’s labeled and why! It would be silly if every edible banana needed a label just because edible bananas don’t exist in nature.”

That was about the end of my thinking on the subject for quite a while. Then a month or so ago I began hearing more discussion about it, and seeing a lot of propaganda — all in support of the initiative. And all sponsored by PCC or Whole Foods. Finally, I saw an emotionally charged tweet with the hashtag #LabelGMOs and I replied to inquire why the tweeter considered this to be an important issue.

I decided to do some research to learn about the nature of the initiative and the facts and arguments applicable to the discussion. At first blush, I was unable to find anything to support the initiative. Well, nothing other than myths and emotional diatribes from non-experts with no sources to back up any of some fairly outlandish claims. On the opposing side I immediately found well-stated, sourced, logical objections from well-respected groups such as the American Association for the Advancement of Science who say, “Legally Mandating GM Food Labels Could ‘Mislead and Falsely Alarm Consumers’,” and the American Medical Association (PDF).

The AMA report’s conclusion is:

Despite strong consumer interest in mandatory labeling of bioengineered foods, the FDA’s science-based labeling policies do not support special labeling without evidence of material differences between bioengineered foods and their traditional counterparts. The Council supports this science-based approach, and believes that thorough pre-market safety assessment and the FDA’s requirement that any material difference between bioengineered foods and their traditional counterparts be disclosed in labeling, are effective in ensuring the safety of bioengineered food. To better characterize the potential harms of bioengineered foods, the Council believes that pre-market safety assessment should shift from a voluntary notification process to a mandatory requirement. The Council notes that consumers wishing to choose foods without bioengineered ingredients may do so by purchasing those that are labeled “USDA Organic.”

Someone supporting the initiative then sent me a link to the World Health Organization’s FAQ on the issue, apparently without reading it. It echoes the sentiments of the AAAS and AMA. As do the European equivalents of these organizations, such as the OECD and EFSA. So what gives?

My next step was to look at the text of the initiative itself. Oddly enough, it seems many of the big sites promoting I-522 have links to read the text which are broken. Fortunately you can get the full thing from the WA Secretary of State site in PDF form here. As I read the text I was overwhelmed with incredulity, and here’s why:

1) It provides no context for its definition of “genetic engineering”

522 immediately begins throwing around the phrase “genetic engineering” with absolutely no effort to establish the scope of its meaning. As any biologist will tell you, genetic engineering is not new and can be accomplished through a variety of means going back thousands of years. The earliest banana cultivars are believed to have been created between 5000 and 8000 BCE. The bananas and many other plants you and I eat today are hybrids which are initially sterile and then made fertile using polyploidization. Neat stuff, but not newsworthy.

Of course, most anti-GMO advocates will immediately cry that GE techniques like hybridization and artificial selection are not covered by their definition of “genetically engineered” or “genetic modification.” But the fact that the initiative makes countless claims using this term before making any effort to establish what it applies to is incredibly disconcerting.

Later, 522 establishes a definition of “genetically engineered” that is more specific. However, it gives no context regarding other forms of genetic engineering that exist, nor does it provide any basis for choosing the particular scope it has selected.

2) It cites no sources.

The initiative says:

Polls consistently show that the vast majority of the public, typically more than ninety percent, wants to know if their food was produced using genetic engineering.

Which polls? What question was actually asked and which options were given as answers? Why is this an argument for labeling? Everyone knows you can get ridiculous poll results by asking biased and uneducated groups questions which they’re ill-equipped to answer. Keep in mind that many polls also show nearly 90% of Americans don’t “believe in” evolution. You know, the thing that makes genetic engineering possible.

It also says:

United States government scientists have stated that the artificial insertion of genetic material into plants, a technique unique to genetic engineering, can cause a variety of significant problems with plant foods. Such genetic engineering can increase the levels of known toxicants in foods and introduce new toxicants and health concerns.

Which scientists? Where did they say this? I scoured the web and literature databases and can’t find anything which looks like it would fit this description. How are they allowed to make claims like this without a single source? There are more examples of this which are just as outrageous.

3) The labeling it prescribes is not useful.

The initiative explicitly says that it does not require the identification of which components were genetically engineered nor how they were engineered. The only plausible argument for labeling which I’ve heard thus far is that “If a health issue is ever found in a GMO product, the label will help us avoid them.” But that’s not true, any more than a “grown with pesticides” label would help deal with an issue attributed to a specific pesticide.

This is because every GMO (even using the narrow definition the initiative eventually establishes) is completely unlike every other. There is absolutely nothing in common between them. All it says is that some modification is made. There are dozens of unique modifications in widespread use today, and undoubtedly new ones will come in time. If you really want to “know what’s in your food,” you’ll at least need a label telling you what was modified and which modification(s) were used.

4) It’s full of exemptions with no justification for their inclusion.

The bill exempts animal products (such as dairy and meat/poultry) where the animal was fed or injected with GE food or drugs. It exempts cheese, yogurt, and baked goods using GE enzymes. It exempts wine (in fact, all alcoholic beverages). It exempts all ready-to-eat food, such as the hot food bars at PCC and Whole Foods. It exempts all “medical food” (without defining the term). Particularly odd for a bill which claims to be looking out for health concerns.

So who wrote this thing anyway?

The author of I-522 is Chris McManus, an advertising executive from Tacoma. When asked about the details of the bill, he told Seattle Weekly:

“Well, you know, I’m not a scientist. I work in media. Those kinds of questions I’ll have to defer to later in the campaign.” (source)

How encouraging!

What does the science actually say?

My next task was to dig into actual scientific literature to see where the claimed health concerns were coming from, and to better understand the processes being used and research into their effects. Here’s what I found:

1) Currently marketed GMO products are safe

As the AMA, WHO, and others I linked to earlier called out, all research into existing GMO products has shown them to be as safe as their conventional counterparts. There have been zero cases of a health issue attributed to the presence of GMOs in food. (Source: Meta-study by Herman and Price)

2) Substantial equivalence is a useful tool

Government standards in the US and around the world require GMO products to establish “substantial equivalence” with their conventional counterparts and to identify and thoroughly test the effects of any deviation from this standard.

This is not to say that assessing the effects of GMOs is without challenges. However, researchers such as Harry Kuiper point out that many conventional foods contain some degree of toxic or carcinogenic chemicals and thus our existing diets have not been proven to be safe. This does mean that changes due to genetic modification could increase the presence of as-yet-unidentified natural toxins or anti-nutrients. However, this also means that positive changes can be missed just as easily. Bt Corn, for example, has been found to have lower levels of the fumonisins found in conventional corn (source).

More importantly, there is nothing anywhere to suggest that modern GMO techniques are more likely to result in such unintended and unidentifiable changes than traditional GE techniques or even “organic” cultivation. In fact…

3) Modern GMO techniques appear to be safer

More and more research is revealing that modern transgenic GMOs contain fewer unintended changes than result from traditional breeding and even environmental factors. (source, another source, another source).

Transgenics are GMOs produced by artificially transferring genes from a sexually incompatible species and tend to get the most attention from those objecting to genetic modification. Note that the term GMO also applies to cisgenic modifications (where the exact same methods are used to transfer genes from another of the same species or a sexually compatible one). I-522 makes no distinction between these concepts.

One reason GMO techniques appear to be safer is that they’re able to make more targeted changes. This illustration conveys a simplified view of these different GE methods:

Why do PCC and Whole Foods support I-522?